Author: admin

How to update Java on CentOS

First of all, check your current Java version with this command:

java -version

Example:

$ java -version

java version "1.8.0_181"

Java(TM) SE Runtime Environment (build 1.8.0_181-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

If your installed version is not Java 8 update 181, follow these steps to download and install it:

- Download the Java JRE package for RPM-based distributions:

curl -Lo jre-8-linux-x64.rpm --header "Cookie: oraclelicense=accept-securebackup-cookie" "https://download.oracle.com/otn-pub/java/jdk/8u181-b13/96a7b8442fe848ef90c96a2fad6ed6d1/jre-8u181-linux-x64.rpm"

- Check that the package was successfully downloaded:

rpm -qlp jre-8-linux-x64.rpm > /dev/null 2>&1 && echo "Java package downloaded successfully" || echo "Java package did not download successfully"

- Install the package using yum:

yum -y install jre-8-linux-x64.rpm

rm -f jre-8-linux-x64.rpm
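The version check at the start of this section can also be scripted. The following is a minimal sketch, assuming a Java 8 style version line such as the one in the example output; the function names `java_update_number` and `needs_update` are hypothetical helpers, not part of Java or CentOS.

```shell
# Hypothetical helper: extract the update number from a Java 8 style
# version line such as: java version "1.8.0_181"
java_update_number() {
    # Print the digits that follow "1.8.0_" inside the quoted version string.
    printf '%s\n' "$1" | sed -n 's/.*version "1\.8\.0_\([0-9][0-9]*\)".*/\1/p'
}

# Hypothetical helper: succeed when the installed update is older than wanted.
needs_update() {
    # $1 = version line, $2 = wanted update number (for example 181)
    current=$(java_update_number "$1")
    # A version line that cannot be parsed is treated as "needs update".
    [ -z "$current" ] || [ "$current" -lt "$2" ]
}

# Usage (java -version writes to stderr, hence 2>&1):
#   line=$(java -version 2>&1 | head -n 1)
#   if needs_update "$line" 181; then echo "update required"; fi
```

The sketch only understands the `1.8.0_NNN` numbering; other Java version formats fall into the "cannot be parsed" branch.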

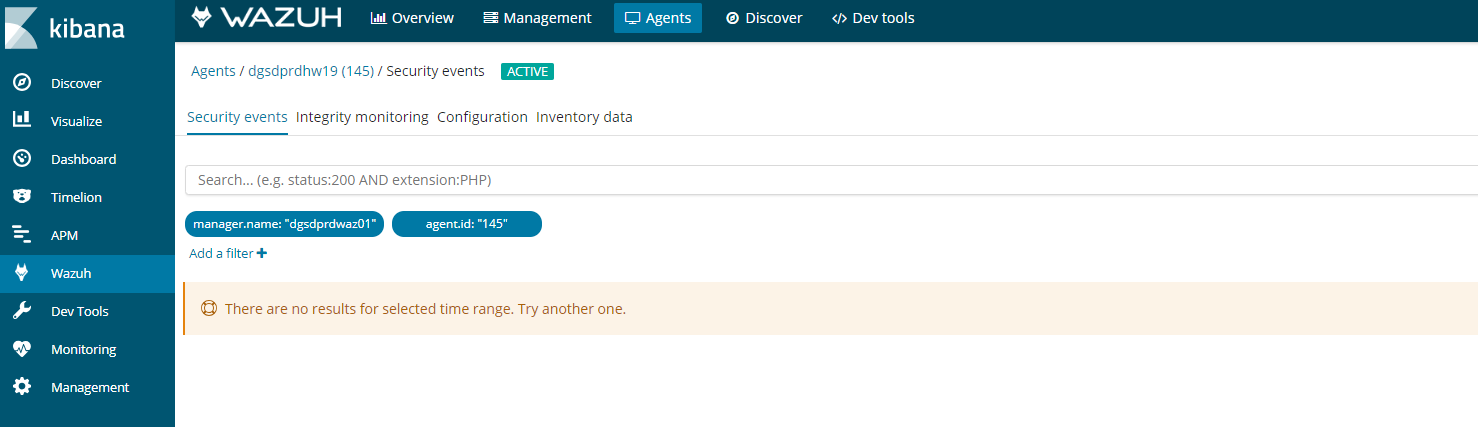

Wazuh-agent troubleshooting guide.

If you see this error in Kibana for an agent, it could have a number of causes.

Follow this process to work out which one applies.

- The agent buffer on the client is full, which is caused by a flood of alerts. Agents have a limited buffer size to keep resource usage on the clients consistent and minimal. If the buffer fills up, Kibana stops receiving data from that agent.

- The first and easiest step is to log into the client and restart the agent:

- systemctl restart wazuh-agent

- /etc/init.d/wazuh-agent restart

- On Windows, open the agent and click on restart

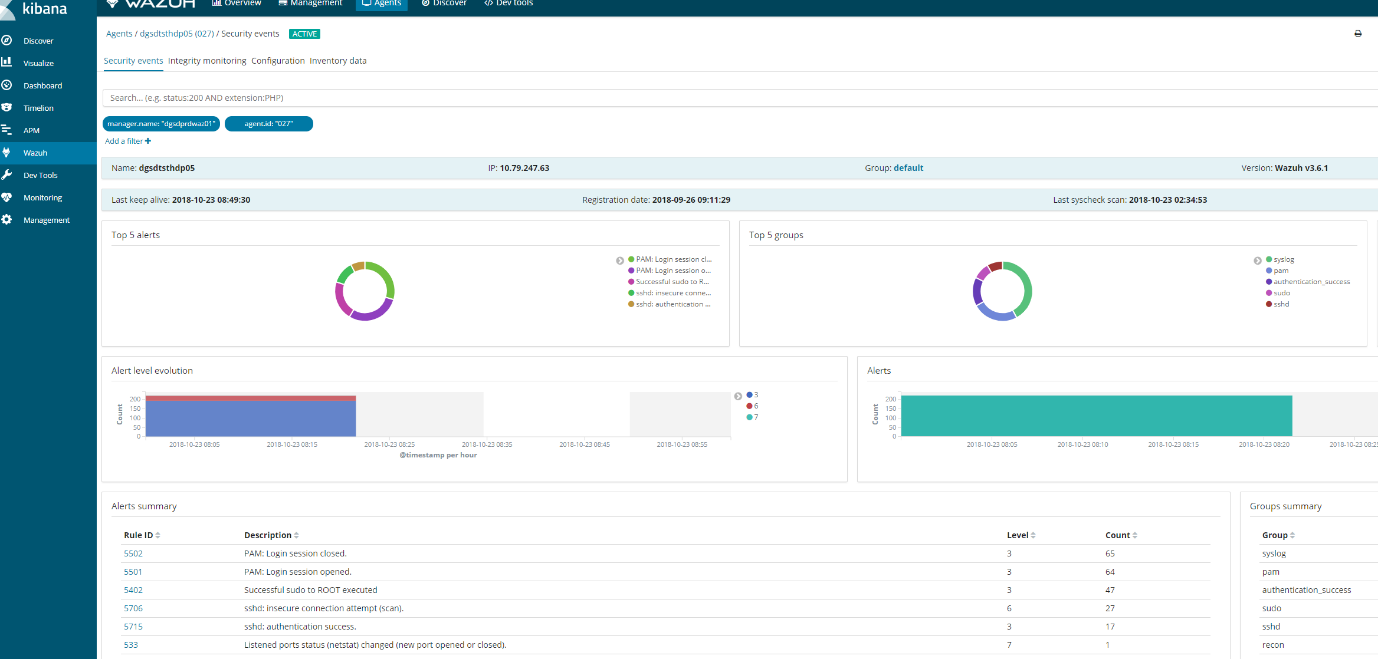

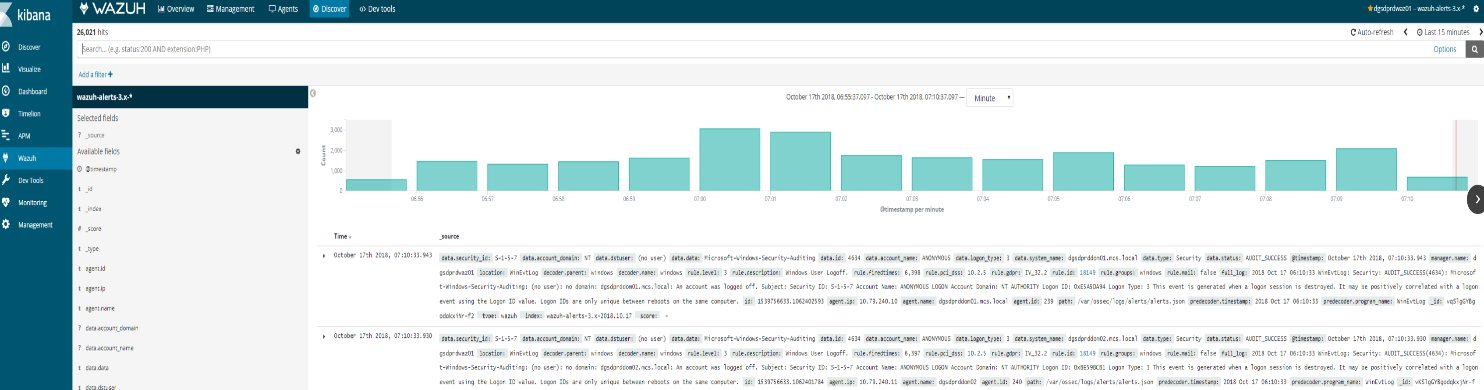

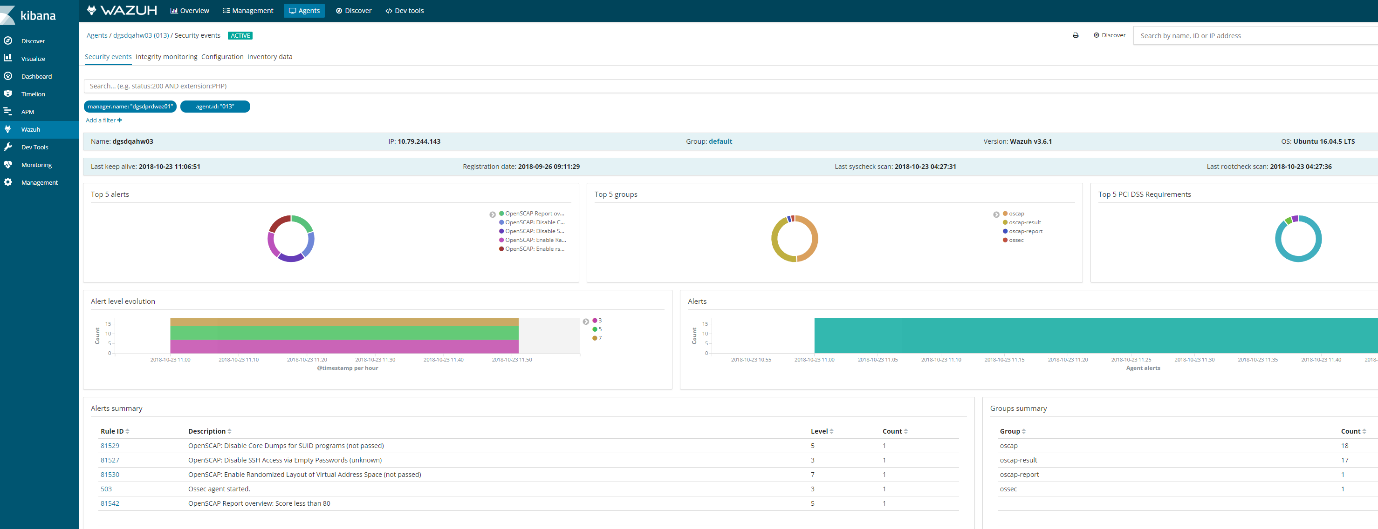

- Go to Kibana

- Click on agents

- Then find your agent

- Click on the agent

- Click security audit

It should look something like this.

If this does not appear, then we need to check wazuh-manager.

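Reason 1: Space issues

Logs can stop being indexed if the Elasticsearch partition fills up. By default, Elasticsearch stops allocating new shards to a node at 85% disk usage and marks indices read-only once usage passes the flood-stage watermark (95% by default).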

# ls /usr/share/elasticsearch/data/ (lives on a different LVM volume)

# ls /var/ossec (lives on a different LVM volume)

- Ensure these partitions have plenty of free space, or Wazuh will go into read-only mode.

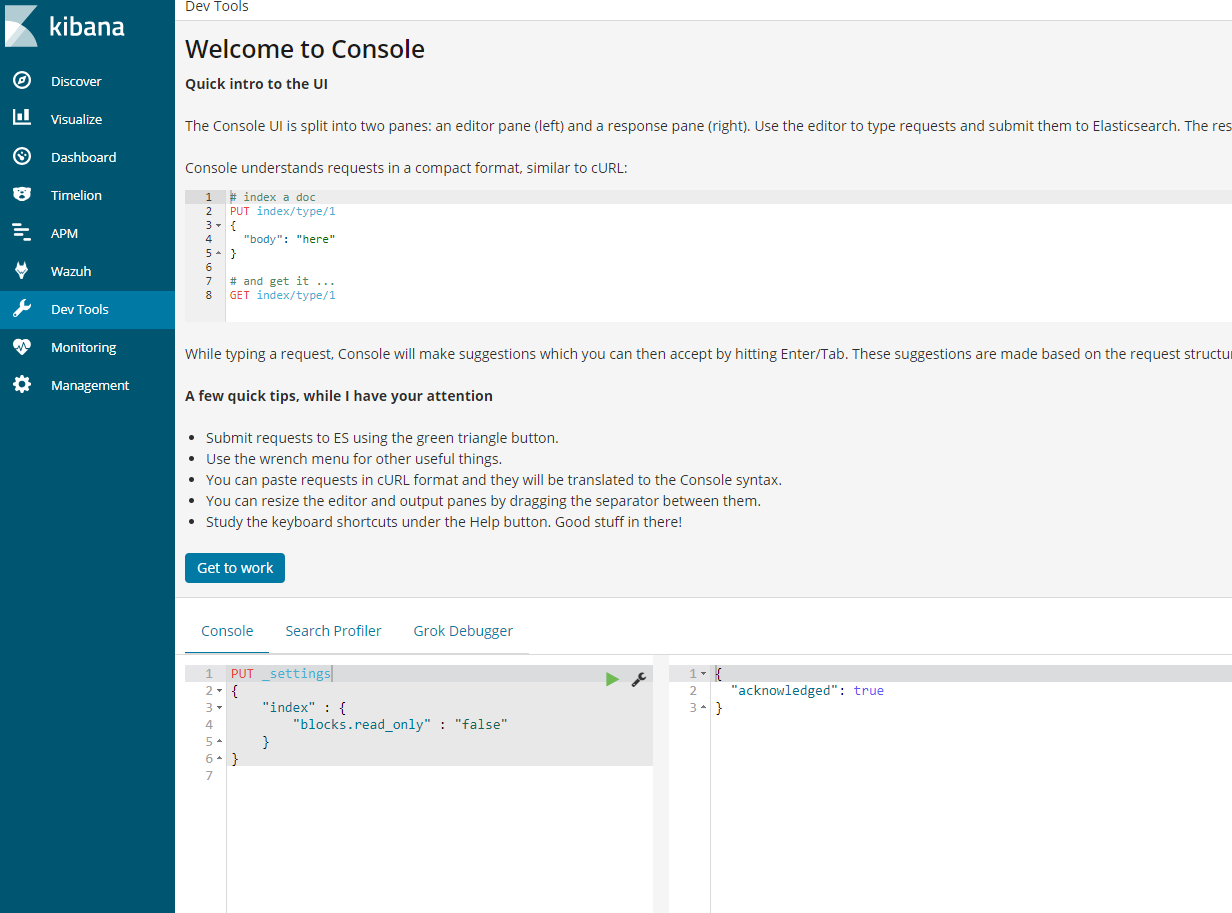
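The free-space check on the two partitions above can be sketched as a small script. This is an illustration only: `parse_df_percent`, `usage_percent` and `over_threshold` are hypothetical helper names, and the 85% threshold is taken from this section rather than from any configured value.

```shell
# Hypothetical helper: read "df -P" output on stdin and print the use%
# of the first filesystem line, without the % sign.
parse_df_percent() {
    # Line 1 is the header; field 5 of line 2 is the capacity column.
    awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Print the use% of the filesystem that holds the given path.
usage_percent() {
    df -P "$1" | parse_df_percent
}

# Succeed (exit 0) when usage is at or above the threshold.
over_threshold() {
    # $1 = usage percent, $2 = threshold percent
    [ "$1" -ge "$2" ]
}

# Usage: warn when either path sits on a filesystem that is 85% full or more.
#   for p in /usr/share/elasticsearch/data /var/ossec; do
#       pct=$(usage_percent "$p")
#       over_threshold "$pct" 85 && echo "WARNING: $p is ${pct}% full"
#   done
```

`df -P` is used because the POSIX output format keeps each filesystem on a single line, which makes the field position predictable.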

- Once you have ensured there is adequate space, you will need to execute a command in Kibana to get it working again.

PUT _settings
{ "index": { "blocks": { "read_only_allow_delete": "false" } } }

- In Kibana, go to Dev Tools, paste the above request and run it.

Alternative command that does the same thing:

- curl -XPUT 'http://localhost:9200/_settings' -H 'Content-Type: application/json' -d '{ "index": { "blocks": { "read_only_allow_delete": "false" } } }'

- Next, restart wazuh-manager and ossec:

- /var/ossec/bin/ossec-control restart

- systemctl restart wazuh-manager

Reason 2: Ensure services are running and check versions

- Elasticsearch: curl -XGET 'localhost:9200'

[root@waz01 ~]# curl localhost:9200/_cluster/health?pretty

{

"cluster_name" : "elasticsearch",

"status" : "yellow",

"timed_out" : false,

"number_of_nodes" : 1,

"number_of_data_nodes" : 1,

"active_primary_shards" : 563,

"active_shards" : 563,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 547,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 50.72072072072073

}
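For scripted checks, the status field can be pulled out of the health response. The sketch below assumes the response looks like the output above; `cluster_status` is a hypothetical helper name, and sed is used only to avoid depending on a JSON tool being installed.

```shell
# Hypothetical helper: read _cluster/health JSON on stdin and print the
# value of its "status" field (green, yellow or red).
cluster_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Usage:
#   status=$(curl -s 'localhost:9200/_cluster/health?pretty' | cluster_status)
#   [ "$status" = "red" ] && echo "cluster is red"
```

A yellow status generally means all primary shards are allocated but some replicas are not, which is expected on a single-node cluster like the one shown here, where replica shards have no second node to be placed on.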

- Kibana: /usr/share/kibana/bin/kibana -V

[root@waz01 ~]# /usr/share/kibana/bin/kibana -V

6.4.0

- Logstash: /usr/share/logstash/bin/logstash -V

[root@waz01 ~]# /usr/share/logstash/bin/logstash -V

logstash 6.4.2

- Check to see if wazuh-manager and logstash are running

- systemctl status wazuh-manager

Active and working

[root@waz01 ~]# systemctl status wazuh-manager

● wazuh-manager.service - Wazuh manager

Loaded: loaded (/etc/systemd/system/wazuh-manager.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2018-10-18 12:25:53 BST; 4 days ago

Process: 4488 ExecStop=/usr/bin/env ${DIRECTORY}/bin/ossec-control stop (code=exited, status=0/SUCCESS)

Process: 4617 ExecStart=/usr/bin/env ${DIRECTORY}/bin/ossec-control start (code=exited, status=0/SUCCESS)

CGroup: /system.slice/wazuh-manager.service

├─4635 /var/ossec/bin/ossec-authd

├─4639 /var/ossec/bin/wazuh-db

├─4656 /var/ossec/bin/ossec-execd

├─4662 /var/ossec/bin/ossec-analysisd

├─4666 /var/ossec/bin/ossec-syscheckd

├─4672 /var/ossec/bin/ossec-remoted

├─4675 /var/ossec/bin/ossec-logcollector

├─4695 /var/ossec/bin/ossec-monitord

└─4699 /var/ossec/bin/wazuh-modulesd

Oct 18 12:25:51 waz01 env[4617]: Started wazuh-db…

Oct 18 12:25:51 waz01 env[4617]: Started ossec-execd…

Oct 18 12:25:51 waz01 env[4617]: Started ossec-analysisd…

Oct 18 12:25:51 waz01 env[4617]: Started ossec-syscheckd…

Oct 18 12:25:51 waz01 env[4617]: Started ossec-remoted…

Oct 18 12:25:51 waz01 env[4617]: Started ossec-logcollector…

Oct 18 12:25:51 waz01 env[4617]: Started ossec-monitord…

Oct 18 12:25:51 waz01 env[4617]: Started wazuh-modulesd…

Oct 18 12:25:53 waz01 env[4617]: Completed.

Oct 18 12:25:53 waz01 systemd[1]: Started Wazuh manager.

- systemctl status logstash

Active and working

[root@waz01 ~]# systemctl status logstash

● logstash.service - logstash

Loaded: loaded (/etc/systemd/system/logstash.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2018-10-15 23:44:21 BST; 1 weeks 0 days ago

Main PID: 11924 (java)

CGroup: /system.slice/logstash.service

└─11924 /bin/java -Xms1g -Xmx1g -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djruby.compile.invokedynamic=true -Djruby.jit.threshold=0 -XX:+HeapDumpOnOutOfMemoryError -Djava.security.egd=file:/dev/urandom -cp /usr/share/logstash/logstash-core/lib/jars/animal-sniffer-annotations-1.14.jar:/usr/share/logstash/logstash-core/lib/jars/commons-codec-1.11.jar:/u…

Oct 15 23:44:41 waz01logstash[11924]: [2018-10-15T23:44:41,581][WARN ][logstash.outputs.elasticsearch] Detected a 6.x and above cluster: the `type` event field won’t be used to determine the document _type {:es_version=>6}

Oct 15 23:44:41 waz01logstash[11924]: [2018-10-15T23:44:41,604][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>”LogStash::Outputs::ElasticSearch“, :hosts=>[“//localhost:9200”]}

Oct 15 23:44:41 waz01logstash[11924]: [2018-10-15T23:44:41,616][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

Oct 15 23:44:41 waz01logstash[11924]: [2018-10-15T23:44:41,641][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{“template”=>”logstash-*”, “version”=>60001, “settings”=>{“index.refresh_interval“=>”5s”}, “mappings”=>{“_default_”=>{“dynamic_templates”=>[{“message_field”=>{“path_match”=>”mess

Oct 15 23:44:41 waz01logstash[11924]: [2018-10-15T23:44:41,662][INFO ][logstash.filters.geoip ] Using geoip database {:path=>”/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-filter-geoip-5.0.3-java/vendor/GeoLite2-City.mmdb”}

Oct 15 23:44:41 waz01logstash[11924]: [2018-10-15T23:44:41,925][INFO ][logstash.inputs.file ] No sincedb_path set, generating one based on the “path” setting {:sincedb_path=>”/var/lib/logstash/plugins/inputs/file/.sincedb_b6991da130c0919d87fbe36c3e98e363″, :path=>[“/var/ossec/logs/alerts/alerts.json“]}

Oct 15 23:44:41 waz01logstash[11924]: [2018-10-15T23:44:41,968][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>”main”, :thread=>”#<Thread:0x63e37301 sleep>”}

Oct 15 23:44:42 waz01logstash[11924]: [2018-10-15T23:44:42,013][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

Oct 15 23:44:42 waz01logstash[11924]: [2018-10-15T23:44:42,032][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections

Oct 15 23:44:42 waz01logstash[11924]: [2018-10-15T23:44:42,288][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

If any of these have failed, restart them:

- systemctl restart logstash

- systemctl restart elasticsearch

- systemctl restart wazuh-manager
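The "restart only what has failed" step can be sketched as a loop over the three services. This is a minimal illustration: `restart_if_inactive` is a hypothetical helper, and the `SYSTEMCTL` variable is an assumption added so the logic can be dry-run with a stand-in command.

```shell
# SYSTEMCTL can be overridden for dry runs; it defaults to the real command.
SYSTEMCTL=${SYSTEMCTL:-systemctl}

# Hypothetical helper: restart each named unit only if it is not running.
restart_if_inactive() {
    for svc in "$@"; do
        # "is-active --quiet" exits 0 only when the unit is active.
        if "$SYSTEMCTL" is-active --quiet "$svc"; then
            echo "$svc: running"
        else
            echo "$svc: not running, restarting"
            "$SYSTEMCTL" restart "$svc"
        fi
    done
}

# Usage (as root):
#   restart_if_inactive elasticsearch logstash wazuh-manager
```

Elasticsearch is listed first in the usage line so that it is already up when Logstash and the manager come back; that ordering is a suggestion rather than something the source prescribes.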

Reason 3: Logstash is broken

- Check the logs for errors.

- tail /var/log/logstash/logstash-plain.log

Possible error #1:

[root@waz01 ~]# tail /var/log/logstash/logstash-plain.log

[2018-10-09T17:37:59,475][INFO ][logstash.outputs.elasticsearch] Retrying individual bulk actions that failed or were rejected by the previous bulk request. {:count=>1}

[2018-10-09T17:37:59,475][INFO ][logstash.outputs.elasticsearch] retrying failed action with response code: 403 ({“type”=>”cluster_block_exception“, “reason”=>”blocked by: [FORBIDDEN/12/index read-only / allow delete (api)];”})

[2018-10-09T17:37:59,475][INFO ][logstash.outputs.elasticsearch] retrying failed action with response code: 403 ({“type”=>”cluster_block_exception“, “reason”=>”blocked by: [FORBIDDEN/12/index read-only / allow delete (api)];”})

[2018-10-09T17:37:59,475][INFO ][logstash.outputs.elasticsearch] Retrying individual bulk actions that failed or were rejected by the previous bulk request. {:count=>2}

[2018-10-09T17:37:59,475][INFO ][logstash.outputs.elasticsearch] retrying failed action with response code: 403 ({“type”=>”cluster_block_exception“, “reason”=>”blocked by: [FORBIDDEN/12/index read-only / allow delete (api)];”})

[2018-10-09T17:37:59,475][INFO ][logstash.outputs.elasticsearch] Retrying individual bulk actions that failed or were rejected by the previous bulk request. {:count=>1}

[2018-10-09T17:37:59,475][INFO ][logstash.outputs.elasticsearch] Retrying individual bulk actions that failed or were rejected by the previous bulk request. {:count=>2}

[2018-10-09T17:37:59,475][INFO ][logstash.outputs.elasticsearch] Retrying individual bulk actions that failed or were rejected by the previous bulk request. {:count=>3}

[2018-10-09T17:37:59,476][INFO ][logstash.outputs.elasticsearch] retrying failed action with response code: 403 ({“type”=>”cluster_block_exception“, “reason”=>”blocked by: [FORBIDDEN/12/index read-only / allow delete (api)];”})

[2018-10-09T17:37:59,476][INFO ][logstash.outputs.elasticsearch] Retrying individual bulk actions that failed or were rejected by the previous bulk request. {:count=>1}

Possible error #2:

[2018-10-15T20:06:10,967][ERROR][org.logstash.Logstash ] java.lang.IllegalStateException: Logstash stopped processing because of an error: (SystemExit) exit

[2018-10-15T20:06:26,863][FATAL][logstash.runner ] An unexpected error occurred! {:error=>#<ArgumentError: Path “/var/lib/logstash/queue” must be a writable directory. It is not writable.>, :backtrace=>[“/usr/share/logstash/logstash-core/lib/logstash/settings.rb:447:in `validate'”, “/usr/share/logstash/logstash-core/lib/logstash/settings.rb:229:in `validate_value‘”, “/usr/share/logstash/logstash-core/lib/logstash/settings.rb:140:in `block in validate_all‘”, “org/jruby/RubyHash.java:1343:in `each'”, “/usr/share/logstash/logstash-core/lib/logstash/settings.rb:139:in `validate_all‘”, “/usr/share/logstash/logstash-core/lib/logstash/runner.rb:278:in `execute'”, “/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/clamp-0.6.5/lib/clamp/command.rb:67:in `run'”, “/usr/share/logstash/logstash-core/lib/logstash/runner.rb:237:in `run'”, “/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/clamp-0.6.5/lib/clamp/command.rb:132:in `run'”, “/usr/share/logstash/lib/bootstrap/environment.rb:73:in `<main>'”]}

[2018-10-15T20:06:26,878][ERROR][org.logstash.Logstash ] java.lang.IllegalStateException: Logstash stopped processing because of an error: (SystemExit) exit

[2018-10-15T20:06:42,543][FATAL][logstash.runner ] An unexpected error occurred! {:error=>#<ArgumentError: Path “/var/lib/logstash/queue” must be a writable directory. It is not writable.>, :backtrace=>[“/usr/share/logstash/logstash-core/lib/logstash/settings.rb:447:in `validate'”, “/usr/share/logstash/logstash-core/lib/logstash/settings.rb:229:in `validate_value‘”, “/usr/share/logstash/logstash-core/lib/logstash/settings.rb:140:in `block in validate_all‘”, “org/jruby/RubyHash.java:1343:in `each'”, “/usr/share/logstash/logstash-core/lib/logstash/settings.rb:139:in `validate_all‘”, “/usr/share/logstash/logstash-core/lib/logstash/runner.rb:278:in `execute'”, “/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/clamp-0.6.5/lib/clamp/command.rb:67:in `run'”, “/usr/share/logstash/logstash-core/lib/logstash/runner.rb:237:in `run'”, “/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/clamp-0.6.5/lib/clamp/command.rb:132:in `run'”, “/usr/share/logstash/lib/bootstrap/environment.rb:73:in `<main>'”]}

[2018-10-15T20:06:42,557][ERROR][org.logstash.Logstash ] java.lang.IllegalStateException: Logstash stopped processing because of an error: (SystemExit) exit

[2018-10-15T20:06:58,344][FATAL][logstash.runner ] An unexpected error occurred! {:error=>#<ArgumentError: Path “/var/lib/logstash/queue” must be a writable directory. It is not writable.>, :backtrace=>[“/usr/share/logstash/logstash-core/lib/logstash/settings.rb:447:in `validate'”, “/usr/share/logstash/logstash-core/lib/logstash/settings.rb:229:in `validate_value‘”, “/usr/share/logstash/logstash-core/lib/logstash/settings.rb:140:in `block in validate_all‘”, “org/jruby/RubyHash.java:1343:in `each'”, “/usr/share/logstash/logstash-core/lib/logstash/settings.rb:139:in `validate_all‘”, “/usr/share/logstash/logstash-core/lib/logstash/runner.rb:278:in `execute'”, “/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/clamp-0.6.5/lib/clamp/command.rb:67:in `run'”, “/usr/share/logstash/logstash-core/lib/logstash/runner.rb:237:in `run'”, “/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/clamp-0.6.5/lib/clamp/command.rb:132:in `run'”, “/usr/share/logstash/lib/bootstrap/environment.rb:73:in `<main>'”]}

[2018-10-15T20:06:58,359][ERROR][org.logstash.Logstash ] java.lang.IllegalStateException: Logstash stopped processing because of an error: (SystemExit) exi

You probably need to reinstall Logstash:

1. Stop affected services:

# systemctl stop logstash

# systemctl stop filebeat (Filebeat should not be installed on a standalone setup, as it causes performance issues.)

2. Remove Filebeat

# yum remove filebeat

3. Setting up Logstash

# curl -so /etc/logstash/conf.d/01-wazuh.conf https://raw.githubusercontent.com/wazuh/wazuh/3.6/extensions/logstash/01-wazuh-local.conf

# usermod -a -G ossec logstash

4. Correct the folder owner for certain Logstash directories:

# chown -R logstash:logstash /usr/share/logstash

# chown -R logstash:logstash /var/lib/logstash

Note: if Logstash still reports write errors in the logs, increase the permissions:

- chmod -R 766 /usr/share/logstash

- systemctl restart logstash

Now restart Logstash:

# systemctl restart logstash

5. Restart Logstash and run the curl command to ensure the indices are not read-only.

- systemctl restart logstash

- curl -XPUT 'http://localhost:9200/_settings' -H 'Content-Type: application/json' -d '{ "index": { "blocks": { "read_only_allow_delete": "false" } } }'

6. Now check your Logstash log file again:

# cat /var/log/logstash/logstash-plain.log | grep -i -E "(error|warning|critical)"

Hopefully you see no errors being generated.

Next, check the plain log:

- tail -10 /var/log/logstash/logstash-plain.log

Good log output:

[root@waz01~]# tail -10 /var/log/logstash/logstash-plain.log

[2018-10-15T23:44:41,581][WARN ][logstash.outputs.elasticsearch] Detected a 6.x and above cluster: the `type` event field won’t be used to determine the document _type {:es_version=>6}

[2018-10-15T23:44:41,604][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>”LogStash::Outputs::ElasticSearch“, :hosts=>[“//localhost:9200”]}

[2018-10-15T23:44:41,616][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

[2018-10-15T23:44:41,641][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{“template”=>”logstash-*”, “version”=>60001, “settings”=>{“index.refresh_interval“=>”5s”}, “mappings”=>{“_default_”=>{“dynamic_templates”=>[{“message_field”=>{“path_match”=>”message”, “match_mapping_type“=>”string”, “mapping”=>{“type”=>”text”, “norms”=>false}}}, {“string_fields“=>{“match”=>”*”, “match_mapping_type“=>”string”, “mapping”=>{“type”=>”text”, “norms”=>false, “fields”=>{“keyword”=>{“type”=>”keyword”, “ignore_above“=>256}}}}}], “properties”=>{“@timestamp”=>{“type”=>”date”}, “@version”=>{“type”=>”keyword”}, “geoip“=>{“dynamic”=>true, “properties”=>{“ip“=>{“type”=>”ip“}, “location”=>{“type”=>”geo_point“}, “latitude”=>{“type”=>”half_float“}, “longitude”=>{“type”=>”half_float“}}}}}}}}

[2018-10-15T23:44:41,662][INFO ][logstash.filters.geoip ] Using geoip database {:path=>”/usr/share/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-filter-geoip-5.0.3-java/vendor/GeoLite2-City.mmdb”}

[2018-10-15T23:44:41,925][INFO ][logstash.inputs.file ] No sincedb_path set, generating one based on the “path” setting {:sincedb_path=>”/var/lib/logstash/plugins/inputs/file/.sincedb_b6991da130c0919d87fbe36c3e98e363″, :path=>[“/var/ossec/logs/alerts/alerts.json“]}

[2018-10-15T23:44:41,968][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>”main”, :thread=>”#<Thread:0x63e37301 sleep>”}

[2018-10-15T23:44:42,013][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2018-10-15T23:44:42,032][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections

[2018-10-15T23:44:42,288][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

Now that we have all clear, let’s check component by component:

1. Check the last 10 alerts generated by your Wazuh manager. Also check the timestamp field to confirm that the alerts are recent.

tail -10 /var/ossec/logs/alerts/alerts.json

2. If the Wazuh manager is generating alerts (step 1), check whether Logstash is reading them. You should see two processes: java for Logstash and ossec-ana from Wazuh.

# lsof /var/ossec/logs/alerts/alerts.json (ossec-ana and java should both be listed; if not, restart ossec)

[root@waz01 ~]# lsof /var/ossec/logs/alerts/alerts.json

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

ossec-ana 4662 ossec 10w REG 253,3 2060995503 201341089 /var/ossec/logs/alerts/alerts.json

java 11924 logstash 93r REG 253,3 2060995503 201341089 /var/ossec/logs/alerts/alerts.json

3. If Logstash is reading our alerts, let’s check whether there is an Elasticsearch index for today (wazuh-alerts-3.x-2018.10.16):

curl localhost:9200/_cat/indices/wazuh-alerts-3.x-*

[root@waz01~]# curl localhost:9200/_cat/indices/wazuh-alerts-3.x-*

yellow open wazuh-alerts-3.x-2018.09.07 HLNDuMjHS1Ox3iLoSwFE7g 5 1 294 0 1000.8kb 1000.8kb

yellow open wazuh-alerts-3.x-2018.09.25 Eg1rvDXbSNSq5EqJAtSm_A 5 1 247998 0 87.7mb 87.7mb

yellow open wazuh-alerts-3.x-2018.09.05 HHRnxqjtTKimmW6FEUUfdw 5 1 143 0 679.6kb 679.6kb

yellow open wazuh-alerts-3.x-2018.09.08 MqIJtCNQR3aU3inuv-pxpw 5 1 183 0 748kb 748kb

yellow open wazuh-alerts-3.x-2018.09.15 GIx8fMXnQ3ukrSkKmjbViQ 5 1 171191 0 45.9mb 45.9mb

yellow open wazuh-alerts-3.x-2018.10.10 W3pw1hDwSp2QAtRm0hwoaQ 5 1 896799 0 662.6mb 662.6mb

yellow open wazuh-alerts-3.x-2018.10.15 rnC7kyXRQSCSXm6wVCiWOw 5 1 2628257 0 1.8gb 1.8gb

yellow open wazuh-alerts-3.x-2018.10.02 nKEdjkFOQ9abitVi_dKF3g 5 1 727934 0 232.7mb 232.7mb

yellow open wazuh-alerts-3.x-2018.09.21 FY0mIXGQQHmCpYgRgOIJhg 5 1 203134 0 63.5mb 63.5mb

yellow open wazuh-alerts-3.x-2018.10.01 mvYSVDZJSfa-F_5dKIBwAg 5 1 402155 0 129.9mb 129.9mb

yellow open wazuh-alerts-3.x-2018.10.18 _2WiGz6fRXSNyDjy8qPefg 5 1 2787147 0 1.8gb 1.8gb

yellow open wazuh-alerts-3.x-2018.09.19 ebb9Jrt1TT6Qm6df7VjZxg 5 1 201897 0 58.3mb 58.3mb

yellow open wazuh-alerts-3.x-2018.09.13 KPy8HfiyRyyPeeHpTGKJNg 5 1 52530 0 13.7mb 13.7mb

yellow open wazuh-alerts-3.x-2018.10.23 T7YJjWhgRMaYyCT-XC1f5w 5 1 1074081 0 742.6mb 742.6mb

yellow open wazuh-alerts-3.x-2018.10.03 bMW_brMeRkSDsJWL6agaWg 5 1 1321895 0 715mb 715mb

yellow open wazuh-alerts-3.x-2018.09.18 B1wJIN1SQKuSQbkoFsTmnA 5 1 187805 0 52.4mb 52.4mb

yellow open wazuh-alerts-3.x-2018.09.04 CvatsnVxTDKgtPzuSkebFQ 5 1 28 0 271.1kb 271.1kb

yellow open wazuh-alerts-3.x-2018.10.21 AWVQ7D8VS_S0DHiXvtNB1Q 5 1 2724453 0 1.8gb 1.8gb

yellow open wazuh-alerts-3.x-2018.09.27 8wRF0XhXQnuVexAxLF6Y5w 5 1 233117 0 79.2mb 79.2mb

yellow open wazuh-alerts-3.x-2018.10.13 wM5hHYMCQsG5XCkIquE-QA 5 1 304830 0 222.4mb 222.4mb

yellow open wazuh-alerts-3.x-2018.09.12 1aB7pIcnTWqZPZkFagHnKA 5 1 73 0 516kb 516kb

yellow open wazuh-alerts-3.x-2018.09.29 BXyZe2eySkSlwutudcTzNA 5 1 222734 0 73.7mb 73.7mb

yellow open wazuh-alerts-3.x-2018.10.04 x8198rpWTxOVBgJ6eTjJJg 5 1 492044 0 364.9mb 364.9mb

yellow open wazuh-alerts-3.x-2018.09.23 ZQZE9KD1R1y6WypYVV5kfg 5 1 216141 0 73.7mb 73.7mb

yellow open wazuh-alerts-3.x-2018.09.22 60AsCkS-RGG0Z2kFGcrbxg 5 1 218077 0 74.2mb 74.2mb

yellow open wazuh-alerts-3.x-2018.10.12 WdiFnzu7QlaBetwzcsIFYQ 5 1 363029 0 237.7mb 237.7mb

yellow open wazuh-alerts-3.x-2018.09.24 Loa8kM7cSJOujjRzvYsVKw 5 1 286140 0 106.3mb 106.3mb

yellow open wazuh-alerts-3.x-2018.09.17 zK3MCinOSF2_3rNAJnuPCQ 5 1 174254 0 48.3mb 48.3mb

yellow open wazuh-alerts-3.x-2018.10.17 A4yCMv4YTuOQWelbb3XQtQ 5 1 2703251 0 1.8gb 1.8gb

yellow open wazuh-alerts-3.x-2018.09.02 lt8xvq2ZRdOQGW7pSX5-wg 5 1 148 0 507kb 507kb

yellow open wazuh-alerts-3.x-2018.08.31 RP0_5r1aQdiMmQYeD0-3CQ 5 1 28 0 247.8kb 247.8kb

yellow open wazuh-alerts-3.x-2018.09.28 iZ2J4UMhR6y1eHH1JiiqLQ 5 1 232290 0 78.6mb 78.6mb

yellow open wazuh-alerts-3.x-2018.09.09 FRELA8dFSWy6aMd12ZFnqw 5 1 428 0 895.1kb 895.1kb

yellow open wazuh-alerts-3.x-2018.09.16 uwLNlaQ1Qnyp2V9jXJJHvA 5 1 171478 0 46.5mb 46.5mb

yellow open wazuh-alerts-3.x-2018.10.14 WQV3dpLeSdapmaKOewUh-Q 5 1 226964 0 154.9mb 154.9mb

yellow open wazuh-alerts-3.x-2018.09.11 2Zc4Fg8lR6G64XuJLZbkBA 5 1 203 0 772.1kb 772.1kb

yellow open wazuh-alerts-3.x-2018.10.16 p2F-trx1R7mBXQUb4eY-Fg 5 1 2655690 0 1.8gb 1.8gb

yellow open wazuh-alerts-3.x-2018.08.29 kAPHZSRpQqaMhoWgkiXupg 5 1 28 0 236.6kb 236.6kb

yellow open wazuh-alerts-3.x-2018.08.28 XmD43PlgTUWaH4DMvZMiqw 5 1 175 0 500.9kb 500.9kb

yellow open wazuh-alerts-3.x-2018.10.19 O4QFPk1FS1urV2CGM2Ul4g 5 1 2718909 0 1.8gb 1.8gb

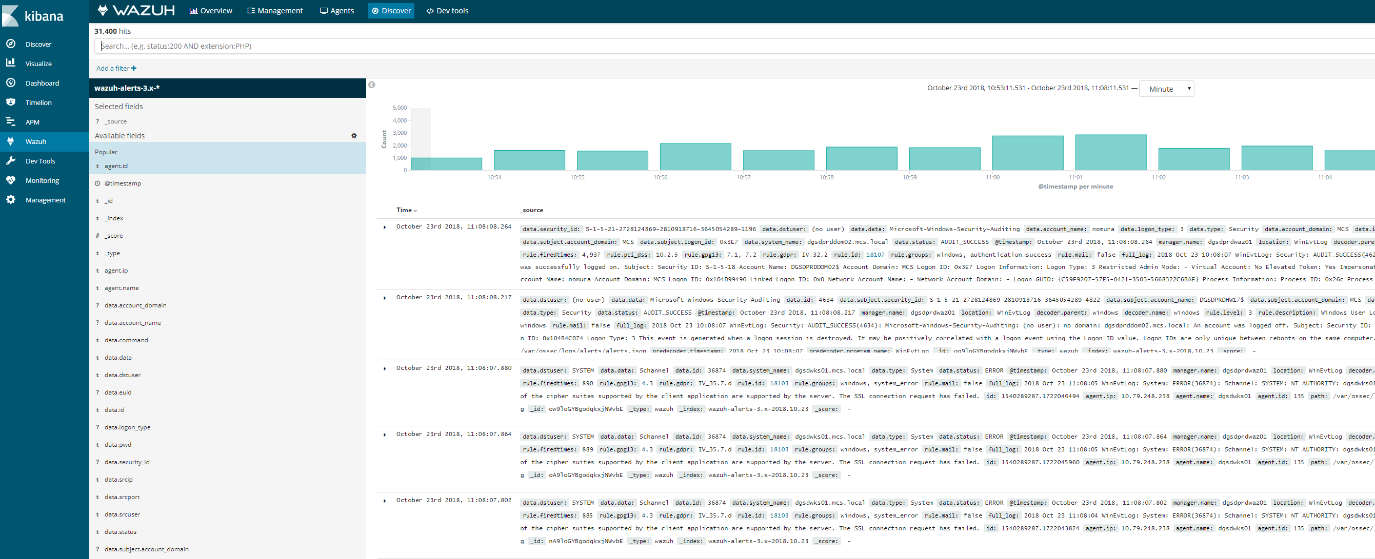

4. If Elasticsearch has an index for today (wazuh-alerts-3.x-2018.10.16), the problem is probably the selected time range in Kibana. To rule this out, go to Kibana > Discover and look for alerts there. If the Discover section shows alerts from today, the Elasticsearch stack is working (at least at index level).

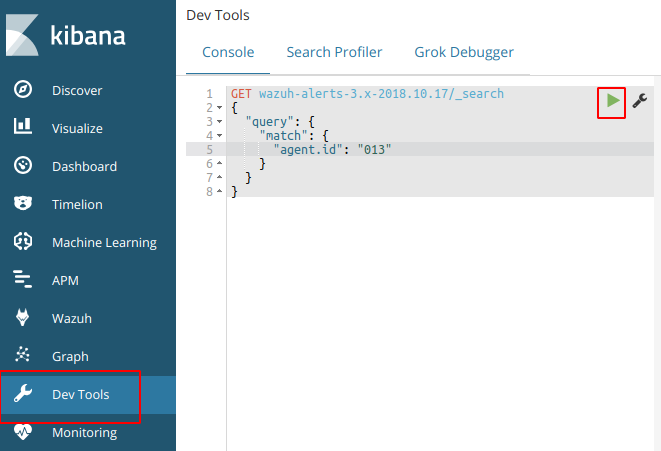

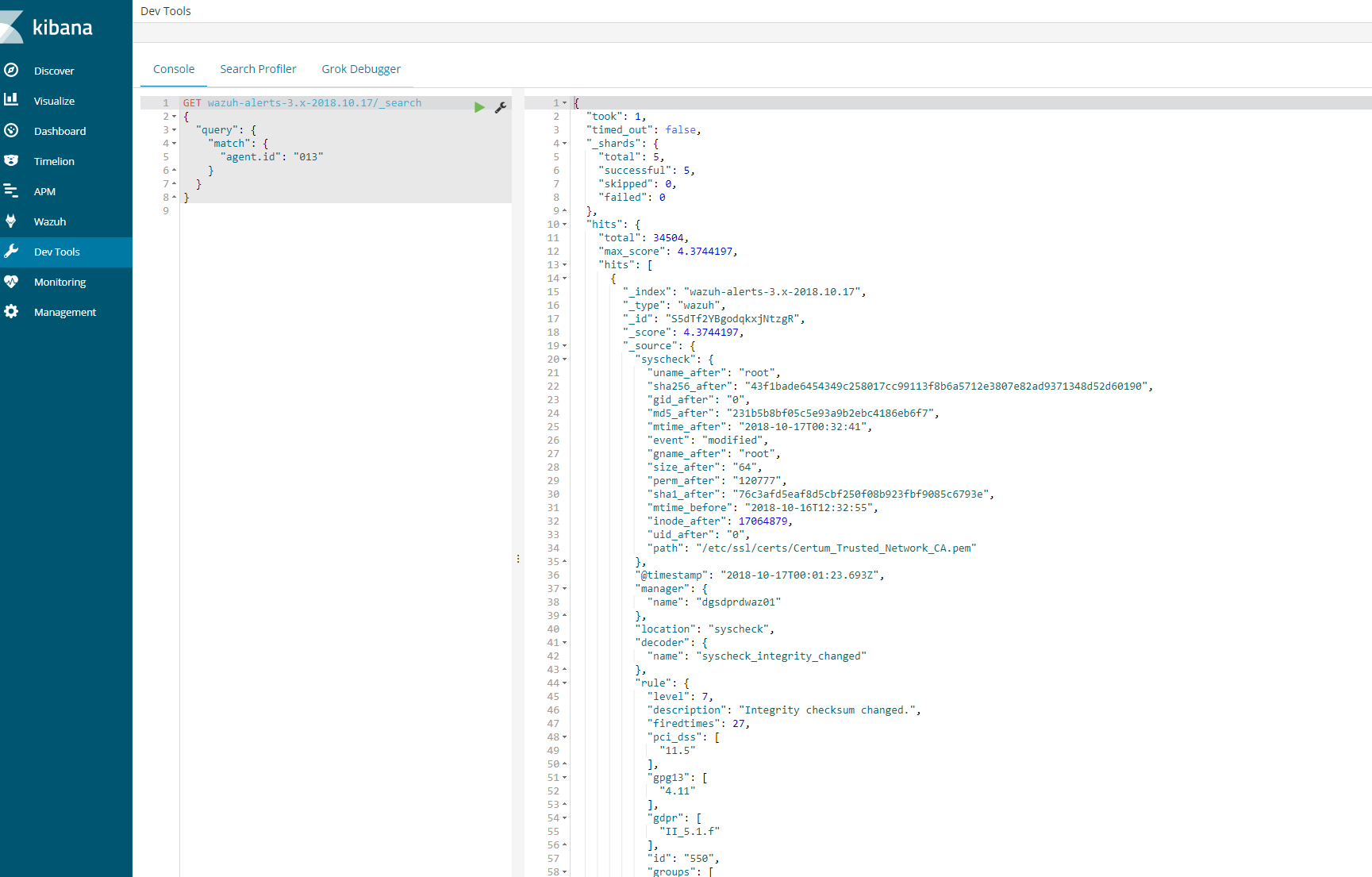

Reason 4: Agent buffer is full due to flood events

If this occurs, events are not logged and data is lost. We want to drill down on a specific agent to figure out what is causing the issue.

Try to fetch data directly from Elasticsearch for today’s index and for agent 013. Copy and paste the following query into the Kibana Dev Tools:

GET wazuh-alerts-3.x-2018.10.17/_search
{ "query": { "match": { "agent.id": "013" } } }

This should return output showing that the agent has events in the index for that day. If it does, we know that the logs are arriving and Kibana is able to communicate with Elasticsearch.
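The same search can be run from a shell on the manager instead of the Kibana Dev Tools. The sketch below assumes Elasticsearch is listening on localhost:9200, as in the earlier curl examples; `agent_query` is a hypothetical helper that only builds the request body.

```shell
# Hypothetical helper: build the match query for one agent id.
agent_query() {
    # $1 = agent id, for example 013 (kept as a string so the leading zero survives)
    printf '{"query":{"match":{"agent.id":"%s"}}}' "$1"
}

# Usage: search the index for 2018.10.17 for events from agent 013.
#   curl -s -H 'Content-Type: application/json' \
#        'localhost:9200/wazuh-alerts-3.x-2018.10.17/_search?pretty' \
#        -d "$(agent_query 013)"
```

Keeping the body in a function makes it easy to repeat the check for other agents by changing only the id and the index date.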

Next steps

- Log in to agent “013” using SSH and execute the following command:

wc -l /var/log/audit/audit.log | cut -d'/' -f1 (CentOS)

wc -l /var/log/syslog | cut -d'/' -f1 (Ubuntu)

root@wazuh-03:/var/log# wc -l /var/log/syslog | cut -d'/' -f1

36451

It should show you a positive number, which is the number of lines in the log file. Note it down.

It is also useful to review your audit rules with the following command:

# auditctl -l

- Now restart the Wazuh agent:

# systemctl restart wazuh-agent

We need to wait until the syscheck scan has finished. This trick is useful to know exactly when it is done:

# tail -f /var/ossec/logs/ossec.log | grep syscheck | grep Ending

The above command should not show anything until the scan has finished (this can take some time, so be patient). At the end, you should see a line like this:

2018/10/17 13:36:03 ossec-syscheckd: INFO: Ending syscheck scan (forwarding database).

Now it is time to check the audit.log file again:

wc -l /var/log/audit/audit.log | cut -d'/' -f1

wc -l /var/log/syslog | cut -d'/' -f1
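Comparing the count noted before the restart with the count taken now shows how fast the log is growing. A minimal sketch of that comparison follows; `line_count` and `growth` are hypothetical helper names.

```shell
# Hypothetical helper: print the number of lines in a file.
line_count() {
    # Reading from stdin keeps the file name out of wc's output,
    # so no cut is needed afterwards.
    wc -l < "$1" | tr -d ' '
}

# Hypothetical helper: print how many lines were added between two counts.
growth() {
    # $1 = count noted before the restart, $2 = count after the scan
    echo $(( $2 - $1 ))
}

# Usage:
#   before=$(line_count /var/log/audit/audit.log)
#   ... restart the agent and wait for the syscheck scan to finish ...
#   after=$(line_count /var/log/audit/audit.log)
#   echo "audit.log grew by $(growth "$before" "$after") lines"
```

A large difference over a short period suggests that this log is one of the sources flooding the agent; log rotation between the two measurements would make the number meaningless.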

If you still see the agent buffer full after these steps, then we need to debug further.

tail -f /var/ossec/logs/ossec.log | grep syscheck | grep Ending

root@waz03:/var/log# cat /var/ossec/logs/ossec.log | grep -i -E "(error|warning|critical)"

2018/10/17 00:09:08 ossec-agentd: WARNING: Agent buffer at 90 %.

2018/10/17 00:09:08 ossec-agentd: WARNING: Agent buffer is full: Events may be lost.

2018/10/17 12:10:20 ossec-agentd: WARNING: Agent buffer at 90 %.

2018/10/17 12:10:20 ossec-agentd: WARNING: Agent buffer is full: Events may be lost.

2018/10/17 14:25:20 ossec-logcollector: ERROR: (1103): Could not open file ‘/var/log/messages’ due to [(2)-(No such file or directory)].

2018/10/17 14:25:20 ossec-logcollector: ERROR: (1103): Could not open file ‘/var/log/secure’ due to [(2)-(No such file or directory)].

2018/10/17 14:26:08 ossec-agentd: WARNING: Agent buffer at 90 %.

2018/10/17 14:26:08 ossec-agentd: WARNING: Agent buffer is full: Events may be lost.

2018/10/17 14:28:18 ossec-logcollector: ERROR: (1103): Could not open file ‘/var/log/messages’ due to [(2)-(No such file or directory)].

2018/10/17 14:28:18 ossec-logcollector: ERROR: (1103): Could not open file ‘/var/log/secure’ due to [(2)-(No such file or directory)].

2018/10/17 14:29:06 ossec-agentd: WARNING: Agent buffer at 90 %.

2018/10/17 14:29:06 ossec-agentd: WARNING: Agent buffer is full: Events may be lost.

Debugging JSON alerts for specific agent 013

Ok, let’s debug the agent events using logall_json on the Wazuh manager instance.

Log in to the Wazuh manager instance using SSH and edit the ossec.conf file.

1. Edit the file /var/ossec/etc/ossec.conf and look for the <global> section, then enable <logall_json>:

<logall_json>yes</logall_json>

2. Restart the Wazuh manager

# systemctl restart wazuh-manager

3. Log in to the Wazuh agent (013) instance using SSH, restart it and run tail -f until it shows you the warning message:

# systemctl restart wazuh-agent

# tail -f /var/ossec/logs/ossec.log | grep WARNING

4. Once you see ossec-agentd: WARNING: Agent buffer at 90 %. in the Wazuh agent logs, switch your CLI to the Wazuh manager instance again. The next file we want to tail is on the Wazuh manager:

tail -f /var/ossec/logs/archives/archives.json

5. Now we can look at the events to clarify what is flooding agent “013”. Once you have seen the log, you can disable logall_json and restart the Wazuh manager.

Log from tail -f /var/ossec/logs/archives/archives.json (wazuh-manager)

{"timestamp":"2018-10-17T18:06:17.33+0100","rule":{"level":7,"description":"Host-based anomaly detection event (rootcheck).","id":"510","firedtimes":3352,"mail":false,"groups":["ossec","rootcheck"],"gdpr":["IV_35.7.d"]},"agent":{"id":"013","name":"waz03","ip":"10.79.244.143"},"manager":{"name":"waz01"},"id":"1539795977.2752038221","full_log":"File '/var/lib/kubelet/pods/2ff462ce-7233-11e8-8282-005056b518e6/containers/install-cni/e26aa5b1' is owned by root and has written permissions to anyone.","decoder":{"name":"rootcheck"},"data":{"title":"File is owned by root and has written permissions to anyone.","file":"/var/lib/kubelet/pods/2ff462ce-7233-11e8-8282-005056b518e6/containers/install-cni/e26aa5b1"},"location":"rootcheck"}

{"timestamp":"2018-10-17T18:06:17.35+0100","rule":{"level":7,"description":"Host-based anomaly detection event (rootcheck).","id":"510","firedtimes":3353,"mail":false,"groups":["ossec","rootcheck"],"gdpr":["IV_35.7.d"]},"agent":{"id":"013","name":"waz03","ip":"10.79.244.143"},"manager":{"name":"waz01"},"id":"1539795977.2752038739","full_log":"File '/var/lib/kubelet/pods/2ff462ce-7233-11e8-8282-005056b518e6/containers/install-cni/12cb9011' is owned by root and has written permissions to anyone.","decoder":{"name":"rootcheck"},"data":{"title":"File is owned by root and has written permissions to anyone.","file":"/var/lib/kubelet/pods/2ff462ce-7233-11e8-8282-005056b518e6/containers/install-cni/12cb9011"},"location":"rootcheck"}

{"timestamp":"2018-10-17T18:06:17.37+0100","rule":{"level":7,"description":"Host-based anomaly detection event (rootcheck).","id":"510","firedtimes":3354,"mail":false,"groups":["ossec","rootcheck"],"gdpr":["IV_35.7.d"]},"agent":{"id":"013","name":"waz03","ip":"10.79.244.143"},"manager":{"name":"waz01"},"id":"1539795977.2752039257","full_log":"File '/var/lib/kubelet/pods/2ff462ce-7233-11e8-8282-005056b518e6/containers/install-cni/4a930107' is owned by root and has written permissions to anyone.","decoder":{"name":"rootcheck"},"data":{"title":"File is owned by root and has written permissions to anyone.","file":"/var/lib/kubelet/pods/2ff462ce-7233-11e8-8282-005056b518e6/containers/install-cni/4a930107"},"location":"rootcheck"}

{"timestamp":"2018-10-17T18:06:17.40+0100","rule":{"level":7,"description":"Host-based anomaly detection event

From the above log we can see that rootcheck is reporting a large number of events for files under /var/lib/kubelet/pods (Kubernetes), which causes the agent buffer to fill up. To prevent this particular issue from happening in future, we can disable these checks at the client level or at the global level.

Here you can see the number of events from rootcheck in your archives.json:

cat archives.json | grep rootcheck | wc -l

489

Here you can see the number of events from rootcheck and rule 510 in the archives.json:

cat archives.json | grep rootcheck | grep 510 | wc -l

489

Here you can see the number of events from rootcheck and rule 510 that include "/var/lib/kubelet/pods/" in your archives.json:

cat archives.json | grep rootcheck | grep 510 | grep /var/lib/kubelet/pods/ | wc -l

489
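The three counts above follow one pattern: keep only the lines that contain every given string, then count them. A minimal sketch of that pattern as a reusable function is shown below; `count_events` is a hypothetical helper, and like the grep chain it does plain substring matching, so "510" would also match a longer id that happens to contain those digits.

```shell
# Hypothetical helper: count the lines of a file that contain ALL of the
# given fixed strings. Pass at least one string.
count_events() {
    file=$1
    shift
    # The strings are fed to awk on stdin, one per line, followed by the file.
    printf '%s\n' "$@" | awk '
        NR == FNR { pats[++n] = $0; next }      # first input: collect the strings
        {
            for (i = 1; i <= n; i++) {
                if (index($0, pats[i]) == 0) { next }   # a string is missing
            }
            c++                                  # every string was found
        }
        END { print c + 0 }
    ' - "$file"
}

# Usage:
#   count_events archives.json rootcheck
#   count_events archives.json rootcheck 510 /var/lib/kubelet/pods/
```

Because awk streams the file line by line, this works on a large archives.json without loading it into memory.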

So we have two options:

Option 1. Edit the ossec.conf on your Wazuh agent “013”. (This is the one I did)

- Log in to the Wazuh agent “013” instance using SSH.

- Edit the file /var/ossec/etc/ossec.conf and look for the rootcheck block, then add an <ignore> entry for that directory.

<rootcheck>

…

<ignore>/var/lib/kubelet</ignore>

…

</rootcheck>

Restart the Wazuh agent “013”

# systemctl restart wazuh-agent

Option 2. Check which group your agent belongs to and edit its centralized configuration.

- Log in to the Wazuh manager instance using SSH.

- Check the group that agent “013” belongs to:

# /var/ossec/bin/agent_groups -s -i 013

- Note down the group, for example: default

- Edit the file /var/ossec/etc/shared/default/agent.conf (replace default with the real group name, which may differ from my example), then add the rootcheck ignore inside the <agent_config> block, for example:

<agent_config>

<!-- Shared agent configuration here -->

<rootcheck>

<ignore>/var/lib/kubelet</ignore>

</rootcheck>

</agent_config>

- Restart the Wazuh manager

# systemctl restart wazuh-manager

- Restart the agent on the client as well

# systemctl restart wazuh-agent

Solution #1 takes effect immediately.

Solution #2 pushes the new configuration from the Wazuh manager to the Wazuh agent. Once the agent receives it, it restarts itself automatically and applies the new configuration. This can take a bit more time than solution #1.

On a side note, you can take a look at this useful link about the agent flooding:

The above link explains how to prevent the agent from being flooded.

Now the agent should show correctly in the 15-minute time range. If a number of clients had the issue, use Ansible to restart the agent on all of them, or set up a cron job on every machine to restart the agent every 24 hours.

Discover for the agent should also show logs.

Ansible adhoc command or playbook.

Example:

- ansible -i hosts-linuxdevelopment -a "sudo systemctl restart wazuh-agent" --vault-password-file /etc/ansible/vaultpw.txt -u ansible_nickt -k -K

How to deploy wazuh-agent with Ansible

Note: For Windows, ports 5986 and 1515 must be open, and configureansiblescript.ps (a PowerShell script) must already have been set up so that Ansible can communicate with the Windows machines and deploy the wazuh-agent to them.

Deploying the wazuh-agent with Ansible to a large group of servers spanning Windows, Ubuntu and CentOS-type distros requires some tweaks on the wazuh manager and on the Ansible server.

This is done on the wazuh-manager server

/var/ossec/etc/ossec.conf – inside this file the following needs to be edited so that registrations record the proper IP of the hosts being registered

<auth>
  <disabled>no</disabled>
  <port>1515</port>
  <use_source_ip>yes</use_source_ip>
  <force_insert>yes</force_insert>
  <force_time>0</force_time>
  <purge>yes</purge>
  <use_password>yes</use_password>
  <limit_maxagents>no</limit_maxagents>
  <ciphers>HIGH:!ADH:!EXP:!MD5:RC4:3DES:!CAMELLIA:@STRENGTH</ciphers>
  <!-- <ssl_agent_ca></ssl_agent_ca> -->
  <ssl_verify_host>no</ssl_verify_host>
  <ssl_manager_cert>/var/ossec/etc/sslmanager.cert</ssl_manager_cert>
  <ssl_manager_key>/var/ossec/etc/sslmanager.key</ssl_manager_key>
  <ssl_auto_negotiate>yes</ssl_auto_negotiate>
</auth>

To enable authd on wazuh-manager

Now on your ansible server

wazuh_managers:
  - address: 10.79.240.160
    port: 1514
    protocol: tcp
    api_port: 55000
    api_proto: 'http'
    api_user: null
wazuh_profile: null
wazuh_auto_restart: 'yes'
wazuh_agent_authd:
  enable: true
  port: 1515

Next section in main.yml

openscap:
  disable: 'no'
  timeout: 1800
  interval: '1d'
  scan_on_start: 'yes'

# We recommend the use of Ansible Vault to protect Wazuh, api, agentless and authd credentials.
authd_pass: 'password'

Test communication to windows machines via ansible run the following from /etc/ansible

How to run the playbook on linux machines, run from /etc/ansible/playbook/

How to run playbook on windows

Ansible playbook-roles-tasks breakdown

:/etc/ansible/playbooks# cat wazuh-agent.yml   (playbook file)

- hosts: all:!wazuh-manager
  roles:
    - ansible-wazuh-agent   # role that is called
  vars:
    wazuh_managers:
      - address: 192.168.10.10
        port: 1514
        protocol: udp
        api_port: 55000
        api_proto: 'http'
        api_user: ansible
    wazuh_agent_authd:
      enable: true
      port: 1515
      ssl_agent_ca: null
      ssl_auto_negotiate: 'no'

Roles: ansible-wazuh-agent

:/etc/ansible/roles/ansible-wazuh-agent/tasks# cat Linux.yml

---
- import_tasks: "RedHat.yml"
  when: ansible_os_family == "RedHat"

- import_tasks: "Debian.yml"
  when: ansible_os_family == "Debian"

- name: Linux | Install wazuh-agent
  become: yes
  package: name=wazuh-agent state=present
  async: 90
  poll: 15
  tags:
    - init

- name: Linux | Check if client.keys exists
  become: yes
  stat: path=/var/ossec/etc/client.keys
  register: check_keys
  tags:
    - config

I added this task. If the client.keys file already exists, registration on Linux is simply skipped when the playbook runs. You may want to disable this later; however, when deploying to new machines it is probably best to keep it active.

- name: empty client key file
  become: yes
  shell: rm -f /var/ossec/etc/client.keys && touch /var/ossec/etc/client.keys

- name: Linux | Agent registration via authd
  block:
    - name: Retrieving authd Credentials
      include_vars: authd_pass.yml
      tags:
        - config
        - authd

    - name: Copy CA, SSL key and cert for authd
      copy:
        src: "{{ item }}"
        dest: "/var/ossec/etc/{{ item | basename }}"
        mode: 0644
      with_items:
        - "{{ wazuh_agent_authd.ssl_agent_ca }}"
        - "{{ wazuh_agent_authd.ssl_agent_cert }}"
        - "{{ wazuh_agent_authd.ssl_agent_key }}"
      tags:
        - config
        - authd
      when:
        - wazuh_agent_authd.ssl_agent_ca is not none

The section below is the most important one, as it is what registers the machine with Wazuh. If this section is skipped, it is usually due to the client.keys file. I have made adjustments from the original git repository as I found it had some issues.

    - name: Linux | Register agent (via authd)
      shell: >
        /var/ossec/bin/agent-auth
        -m {{ wazuh_managers.0.address }}
        -p {{ wazuh_agent_authd.port }}
        {% if authd_pass is defined %}-P {{ authd_pass }}{% endif %}
        {% if wazuh_agent_authd.ssl_agent_ca is not none %}
        -v "/var/ossec/etc/{{ wazuh_agent_authd.ssl_agent_ca | basename }}"
        -x "/var/ossec/etc/{{ wazuh_agent_authd.ssl_agent_cert | basename }}"
        -k "/var/ossec/etc/{{ wazuh_agent_authd.ssl_agent_key | basename }}"
        {% endif %}
        {% if wazuh_agent_authd.ssl_auto_negotiate == 'yes' %}-a{% endif %}
      become: yes
      register: agent_auth_output
      when:
        - check_keys.stat.size == 0
        - wazuh_managers.0.address is not none
      tags:
        - config
        - authd

    - name: Linux | Verify agent registration
      shell: echo {{ agent_auth_output }} | grep "Valid key created"
      when:
        - check_keys.stat.size == 0
        - wazuh_managers.0.address is not none
      tags:
        - config
        - authd
  when: wazuh_agent_authd.enable == true

- name: Linux | Agent registration via rest-API
  block:
    - name: Retrieving rest-API Credentials
      include_vars: api_pass.yml
      tags:
        - config
        - api

    - name: Linux | Create the agent key via rest-API
      uri:
        url: "{{ wazuh_managers.0.api_proto }}://{{ wazuh_managers.0.address }}:{{ wazuh_managers.0.api_port }}/agents/"
        validate_certs: no
        method: POST
        body: {"name":"{{ inventory_hostname }}"}
        body_format: json
        status_code: 200
        headers:
          Content-Type: "application/json"
        user: "{{ wazuh_managers.0.api_user }}"
        password: "{{ api_pass }}"
      register: newagent_api
      changed_when: newagent_api.json.error == 0
      when:
        - check_keys.stat.size == 0
        - wazuh_managers.0.address is not none
      become: no
      tags:
        - config
        - api

    - name: Linux | Retrieve new agent data via rest-API
      uri:
        url: "{{ wazuh_managers.0.api_proto }}://{{ wazuh_managers.0.address }}:{{ wazuh_managers.0.api_port }}/agents/{{ newagent_api.json.data.id }}"
        validate_certs: no
        method: GET
        return_content: yes
        user: "{{ wazuh_managers.0.api_user }}"
        password: "{{ api_pass }}"
      when:
        - check_keys.stat.size == 0
        - wazuh_managers.0.address is not none
        - newagent_api.json.error == 0
      register: newagentdata_api
      delegate_to: localhost
      become: no
      tags:
        - config
        - api

    - name: Linux | Register agent (via rest-API)
      command: /var/ossec/bin/manage_agents
      environment:
        OSSEC_ACTION: i
        OSSEC_AGENT_NAME: '{{ newagentdata_api.json.data.name }}'
        OSSEC_AGENT_IP: '{{ newagentdata_api.json.data.ip }}'
        OSSEC_AGENT_ID: '{{ newagent_api.json.data.id }}'
        OSSEC_AGENT_KEY: '{{ newagent_api.json.data.key }}'
        OSSEC_ACTION_CONFIRMED: y
      register: manage_agents_output
      when:
        - check_keys.stat.size == 0
        - wazuh_managers.0.address is not none
        - newagent_api.changed
      tags:
        - config
        - api
      notify: restart wazuh-agent
  when: wazuh_agent_authd.enable == false

- name: Linux | Vuls integration deploy (runs in background, can take a while)
  command: /var/ossec/wodles/vuls/deploy_vuls.sh {{ ansible_distribution|lower }} {{ ansible_distribution_major_version|int }}
  args:
    creates: /var/ossec/wodles/vuls/config.toml
  async: 3600
  poll: 0
  when:
    - wazuh_agent_config.vuls.disable != 'yes'
    - ansible_distribution == 'Redhat' or ansible_distribution == 'CentOS' or ansible_distribution == 'Ubuntu' or ansible_distribution == 'Debian' or ansible_distribution == 'Oracle'
  tags:
    - init

- name: Linux | Installing agent configuration (ossec.conf)
  become: yes
  template: src=var-ossec-etc-ossec-agent.conf.j2
            dest=/var/ossec/etc/ossec.conf
            owner=root
            group=ossec
            mode=0644
  notify: restart wazuh-agent
  tags:
    - init
    - config

- name: Linux | Ensure Wazuh Agent service is restarted and enabled
  become: yes
  service:
    name: wazuh-agent
    enabled: yes
    state: restarted

- import_tasks: "RMRedHat.yml"
  when: ansible_os_family == "RedHat"

- import_tasks: "RMDebian.yml"
  when: ansible_os_family == "Debian"

Windows- tasks

Note: This section only works if your Ansible is configured to communicate with Windows machines. It requires that port 5986 is open from the Ansible server to the Windows machine, and that port 1515 is open from the Windows machine to the wazuh-manager.

Problems: When using authd and Kerberos for Windows, make sure the host names are listed in /etc/hosts on the Ansible server to help alleviate agent deployment issues. The script does not seem to cope well with more than 5 or 6 clients at a time, at least in my experience.

I either had to rejoin the Windows machine to the domain or remove the client.keys file. I have updated this task file to remove the client.keys file before it checks whether the file exists. You do need to play with it a bit sometimes. I have also added a section that adds the wazuh-agent as a service and restarts it upon deployment, as I found this was sometimes skipped entirely.

:/etc/ansible/roles/ansible-wazuh-agent/tasks# cat Windows.yml

---
- name: Windows | Get current installed version
  win_shell: "{{ wazuh_winagent_config.install_dir }}ossec-agent.exe -h"
  args:
    removes: "{{ wazuh_winagent_config.install_dir }}ossec-agent.exe"
  register: agent_version
  failed_when: False
  changed_when: False

- name: Windows | Check Wazuh agent version installed
  set_fact: correct_version=true
  when:
    - agent_version.stdout is defined
    - wazuh_winagent_config.version in agent_version.stdout

- name: Windows | Downloading windows Wazuh agent installer
  win_get_url:
    dest: C:\wazuh-agent-installer.msi
    url: "{{ wazuh_winagent_config.repo }}wazuh-agent-{{ wazuh_winagent_config.version }}-{{ wazuh_winagent_config.revision }}.msi"
  when:
    - correct_version is not defined

- name: Windows | Verify the downloaded Wazuh agent installer
  win_stat:
    path: C:\wazuh-agent-installer.msi
    get_checksum: yes
    checksum_algorithm: md5
  register: installer_md5
  when:
    - correct_version is not defined
  failed_when:
    - installer_md5.stat.checksum != wazuh_winagent_config.md5

- name: Windows | Install Wazuh agent
  win_package:
    path: C:\wazuh-agent-installer.msi
    arguments: APPLICATIONFOLDER={{ wazuh_winagent_config.install_dir }}
  when:
    - correct_version is not defined

This section was added. If client.keys was present, registration would be skipped.

- name: Remove a file, if present
  win_file:
    path: C:\wazuh-agent\client.keys
    state: absent

This section was added for troubleshooting purposes

#- name: Touch a file (creates if not present, updates modification time if present)
#  win_file:
#    path: C:\wazuh-agent\client.keys
#    state: touch

- name: Windows | Check if client.keys exists
  win_stat: path="{{ wazuh_winagent_config.install_dir }}client.keys"
  register: check_windows_key
  notify: restart wazuh-agent windows
  tags:
    - config

- name: Retrieving authd Credentials
  include_vars: authd_pass.yml
  tags:
    - config

- name: Windows | Register agent
  win_shell: >
    {{ wazuh_winagent_config.install_dir }}agent-auth.exe
    -m {{ wazuh_managers.0.address }}
    -p {{ wazuh_agent_authd.port }}
    {% if authd_pass is defined %}-P {{ authd_pass }}{% endif %}
  args:
    chdir: "{{ wazuh_winagent_config.install_dir }}"
  register: agent_auth_output
  notify: restart wazuh-agent windows
  when:
    - wazuh_agent_authd.enable == true
    - check_windows_key.stat.exists == false
    - wazuh_managers.0.address is not none
  tags:
    - config

- name: Windows | Installing agent configuration (ossec.conf)
  win_template:
    src: var-ossec-etc-ossec-agent.conf.j2
    dest: "{{ wazuh_winagent_config.install_dir }}ossec.conf"
  notify: restart wazuh-agent windows
  tags:
    - config

- name: Windows | Delete downloaded Wazuh agent installer file
  win_file:
    path: C:\wazuh-agent-installer.msi
    state: absent

This section was added because the service was sometimes not created and the agent was not restarted upon deployment, which resulted in a non-active client in Kibana.

- name: Create a new service
  win_service:
    name: wazuh-agent
    path: C:\wazuh-agent\ossec-agent.exe

- name: Windows | Wazuh-agent Restart
  win_service:
    name: wazuh-agent
    state: restarted

How to deploy Wazuh

Adding the Wazuh repository

The first step to setting up Wazuh is to add the Wazuh repository to your server. If you want to download the wazuh-manager package directly, or check the compatible versions, click here.

To set up the repository, run this command:

# cat > /etc/yum.repos.d/wazuh.repo <<\EOF

[wazuh_repo]

gpgcheck=1

gpgkey=https://packages.wazuh.com/key/GPG-KEY-WAZUH

enabled=1

name=Wazuh repository

baseurl=https://packages.wazuh.com/3.x/yum/

protect=1

EOF

For CentOS-5 and RHEL-5:

# cat > /etc/yum.repos.d/wazuh.repo <<\EOF

[wazuh_repo]

gpgcheck=1

gpgkey=http://packages.wazuh.com/key/GPG-KEY-WAZUH-5

enabled=1

name=Wazuh repository

baseurl=http://packages.wazuh.com/3.x/yum/5/$basearch/

protect=1

EOF

Installing the Wazuh Manager

The next step is to install the Wazuh Manager on your system:

# yum install wazuh-manager

Once the process is complete, you can check the service status with:

- For Systemd:

# systemctl status wazuh-manager

- For SysV Init:

# service wazuh-manager status

Installing the Wazuh API

- NodeJS >= 4.6.1 is required in order to run the Wazuh API. If you do not have NodeJS installed or your version is older than 4.6.1, we recommend that you add the official NodeJS repository like this:

# curl --silent --location https://rpm.nodesource.com/setup_8.x | bash -

and then, install NodeJS:

# yum install nodejs

- Python >= 2.7 is required in order to run the Wazuh API. It is installed by default or included in the official repositories in most Linux distributions.

To determine if the python version on your system is lower than 2.7, you can run the following:

# python --version

It is possible to set a custom Python path for the API in “/var/ossec/api/configuration/config.js”, in case the stock version of Python in your distro is too old:

config.python = [
    // Default installation
    {
        bin: "python",
        lib: ""
    },
    // Package 'python27' for CentOS 6
    {
        bin: "/opt/rh/python27/root/usr/bin/python",
        lib: "/opt/rh/python27/root/usr/lib64"
    }
];

CentOS 6 and Red Hat 6 come with Python 2.6, however, you can install Python 2.7 in parallel to maintain the older version(s):

- For CentOS 6:

# yum install -y centos-release-scl

# yum install -y python27

- For RHEL 6:

# yum install python27

You may need to first enable a repository in order to get python27, with a command like this:

# yum-config-manager --enable rhui-REGION-rhel-server-rhscl

# yum-config-manager --enable rhel-server-rhscl-6-rpms

- Install the Wazuh API. It will update NodeJS if it is required:

# yum install wazuh-api

- Once the process is complete, you can check the service status with:

- For Systemd:

# systemctl status wazuh-api

- For SysV Init:

# service wazuh-api status

Installing Filebeat

Filebeat is the tool on the Wazuh server that securely forwards alerts and archived events to the Logstash service on the Elastic Stack server(s).

Warning

In a single-host architecture (where Wazuh server and Elastic Stack are installed in the same system), the installation of Filebeat is not needed since Logstash will be able to read the event/alert data directly from the local filesystem without the assistance of a forwarder.

The RPM package is suitable for installation on Red Hat, CentOS and other modern RPM-based systems.

- Install the GPG keys from Elastic and then the Elastic repository:

# rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch

# cat > /etc/yum.repos.d/elastic.repo << EOF

[elasticsearch-6.x]

name=Elasticsearch repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

- Install Filebeat. Note: if you are doing an all-in-one setup, do not install Filebeat.

# yum install filebeat-6.4.2

- Download the Filebeat configuration file from the Wazuh repository. This is pre-configured to forward Wazuh alerts to Logstash:

# curl -so /etc/filebeat/filebeat.yml https://raw.githubusercontent.com/wazuh/wazuh/3.6/extensions/filebeat/filebeat.yml

- Edit the file /etc/filebeat/filebeat.yml and replace ELASTIC_SERVER_IP with the IP address or the hostname of the Elastic Stack server. For example:

output:
  logstash:
    hosts: ["ELASTIC_SERVER_IP:5000"]

- Enable and start the Filebeat service:

- For Systemd:

# systemctl daemon-reload

# systemctl enable filebeat.service

# systemctl start filebeat.service

- For SysV Init:

# chkconfig --add filebeat

# service filebeat start

Next steps

Once you have installed the manager, API and Filebeat (only needed for distributed architectures), you are ready to install Elastic Stack.

Installing Elastic Stack

This guide describes the installation of an Elastic Stack server comprised of Logstash, Elasticsearch, and Kibana. We will illustrate package-based installations of these components. You can also install them from binary tarballs, however, this is not preferred or supported under Wazuh documentation.

In addition to Elastic Stack components, you will also find the instructions to install and configure the Wazuh app (deployed as a Kibana plugin).

Depending on your operating system you can choose to install Elastic Stack from RPM or DEB packages. Consult the table below and choose how to proceed:

Install Elastic Stack with RPM packages

The RPM packages are suitable for installation on Red Hat, CentOS and other RPM-based systems.

Note

Many of the commands described below need to be executed with root user privileges.

Preparation

- Oracle Java JRE 8 is required by Logstash and Elasticsearch.

Note

The following command accepts the necessary cookies to download Oracle Java JRE. Please, visit Oracle Java 8 JRE Download Page for more information.

# curl -Lo jre-8-linux-x64.rpm --header "Cookie: oraclelicense=accept-securebackup-cookie" "https://download.oracle.com/otn-pub/java/jdk/8u191-b12/2787e4a523244c269598db4e85c51e0c/jre-8u191-linux-x64.rpm"

Now, check if the package was downloaded successfully:

# rpm -qlp jre-8-linux-x64.rpm > /dev/null 2>&1 && echo "Java package downloaded successfully" || echo "Java package did not download successfully"

Finally, install the RPM package using yum:

# yum -y install jre-8-linux-x64.rpm
# rm -f jre-8-linux-x64.rpm

- Install the Elastic repository and its GPG key:

# rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch

# cat > /etc/yum.repos.d/elastic.repo << EOF
[elasticsearch-6.x]
name=Elasticsearch repository for 6.x packages
baseurl=https://artifacts.elastic.co/packages/6.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF

Elasticsearch

Elasticsearch is a highly scalable full-text search and analytics engine. For more information, please see Elasticsearch.

- Install the Elasticsearch package:

# yum install elasticsearch-6.4.2

- Enable and start the Elasticsearch service:

- For Systemd:

# systemctl daemon-reload
# systemctl enable elasticsearch.service
# systemctl start elasticsearch.service

- For SysV Init:

# chkconfig --add elasticsearch
# service elasticsearch start

It’s important to wait until the Elasticsearch server finishes starting. Check the current status with the following command, which should give you a response like the one shown below:

# curl "localhost:9200/?pretty"
{
  "name" : "Zr2Shu_",
  "cluster_name" : "elasticsearch",
  "cluster_uuid" : "M-W_RznZRA-CXykh_oJsCQ",
  "version" : {
    "number" : "6.4.2",
    "build_flavor" : "default",
    "build_type" : "rpm",
    "build_hash" : "053779d",
    "build_date" : "2018-07-20T05:20:23.451332Z",
    "build_snapshot" : false,
    "lucene_version" : "7.3.1",
    "minimum_wire_compatibility_version" : "5.6.0",
    "minimum_index_compatibility_version" : "5.0.0"
  },
  "tagline" : "You Know, for Search"
}
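Since the following steps depend on Elasticsearch answering, the waiting can be scripted instead of re-running curl by hand. A minimal sketch, assuming a made-up helper called wait_until; the retry count and delay in the usage line are illustrative values and do not come from the Wazuh documentation.

```shell
# wait_until TRIES DELAY CMD...
# Run CMD until it succeeds, at most TRIES times, sleeping DELAY seconds
# between attempts. Hypothetical helper, for illustration only.
wait_until() {
    tries=$1; delay=$2; shift 2
    i=0
    while [ "$i" -lt "$tries" ]; do
        if "$@" > /dev/null 2>&1; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Usage (commented out so the sketch has no side effects):
# wait_until 30 5 curl -sf "localhost:9200/?pretty" && echo "Elasticsearch is up"
```

The helper returns non-zero if the command never succeeds, so it can gate the template-loading step in a script.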

- Load the Wazuh template for Elasticsearch:

# curl https://raw.githubusercontent.com/wazuh/wazuh/3.6/extensions/elasticsearch/wazuh-elastic6-template-alerts.json | curl -XPUT 'http://localhost:9200/_template/wazuh' -H 'Content-Type: application/json' -d @-

Note

It is recommended that the default configuration be edited to improve the performance of Elasticsearch. To do so, please see Elasticsearch tuning.

Logstash

Logstash is the tool that collects, parses, and forwards data to Elasticsearch for indexing and storage of all logs generated by the Wazuh server. For more information, please see Logstash.

- Install the Logstash package:

# yum install logstash-6.4.2

- Download the Wazuh configuration file for Logstash:

- Local configuration (only in a single-host architecture):

- # curl -so /etc/logstash/conf.d/01-wazuh.conf https://raw.githubusercontent.com/wazuh/wazuh/3.6/extensions/logstash/01-wazuh-local.conf

Because the Logstash user needs to read the alerts.json file, please add it to OSSEC group by running:

# usermod -a -G ossec logstash

- Remote configuration (only in a distributed architecture):

- # curl -so /etc/logstash/conf.d/01-wazuh.conf https://raw.githubusercontent.com/wazuh/wazuh/3.6/extensions/logstash/01-wazuh-remote.conf

Note

Follow the next steps if you use CentOS-6/RHEL-6 or Amazon AMI (Logstash uses Upstart as its service manager there and needs to be fixed, see this bug):

- Edit the file /etc/logstash/startup.options, changing line 30 from LS_GROUP=logstash to LS_GROUP=ossec.

- Update the service with the new parameters by running the command /usr/share/logstash/bin/system-install

- Restart Logstash.

- Enable and start the Logstash service:

- For Systemd:

# systemctl daemon-reload

# systemctl enable logstash.service

# systemctl start logstash.service

- For SysV Init:

# chkconfig --add logstash

# service logstash start

Note

If you are running the Wazuh server and the Elastic Stack server on separate systems (distributed architecture), it is important to configure encryption between Filebeat and Logstash. To do so, please see Setting up SSL for Filebeat and Logstash.

Kibana

Kibana is a flexible and intuitive web interface for mining and visualizing the events and archives stored in Elasticsearch. Find more information at Kibana.

- Install the Kibana package:

# yum install kibana-6.4.2

- Install the Wazuh app plugin for Kibana:

- Increase the default Node.js heap memory limit to prevent out of memory errors when installing the Wazuh app. Set the limit as follows:

# export NODE_OPTIONS="--max-old-space-size=3072"

- Install the Wazuh app:

# sudo -u kibana /usr/share/kibana/bin/kibana-plugin install https://packages.wazuh.com/wazuhapp/wazuhapp-3.6.1_6.4.2.zip

Warning

The Kibana plugin installation process may take several minutes. Please wait patiently.

Note

If you want to download a different Wazuh app plugin for another version of Wazuh or Elastic Stack, check the table available at GitHub and use the appropriate installation command.

- Kibana will only listen on the loopback interface (localhost) by default. To set up Kibana to listen on all interfaces, edit the file /etc/kibana/kibana.yml uncommenting the setting server.host. Change the value to:

server.host: "0.0.0.0"

Note

It is recommended that an Nginx proxy be set up for Kibana in order to use SSL encryption and to enable authentication. Instructions to set up the proxy can be found at Setting up SSL and authentication for Kibana.

- Enable and start the Kibana service:

- For Systemd:

# systemctl daemon-reload

# systemctl enable kibana.service

# systemctl start kibana.service

- For SysV Init:

# chkconfig --add kibana

# service kibana start

- Disable the Elasticsearch repository:

It is recommended that the Elasticsearch repository be disabled in order to prevent an upgrade to a newer Elastic Stack version due to the possibility of undoing changes with the App. To do this, use the following command:

# sed -i "s/^enabled=1/enabled=0/" /etc/yum.repos.d/elastic.repo
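To confirm the change took effect, one option is to run the same substitution and then check that no enabled=1 line is left. This is a sketch only: the function name disable_repo is made up, and the file path is passed as an argument so you can try it on a copy first. It relies on GNU sed's -i option, which is what CentOS ships.

```shell
# disable_repo FILE
# Set enabled=0 in a yum .repo file, then verify that nothing is still enabled.
# Hypothetical helper name, for illustration only.
disable_repo() {
    sed -i "s/^enabled=1/enabled=0/" "$1" || return 1
    # Succeed only if no repository in the file is still enabled.
    ! grep -q "^enabled=1" "$1"
}

# Usage: disable_repo /etc/yum.repos.d/elastic.repo
```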

Setup password for wazuh-manager

Securing the Wazuh API

By default, the communications between the Wazuh Kibana App and the Wazuh API are not encrypted. You should take the following actions to secure the Wazuh API.

- Change default credentials:

By default you can access by typing user “foo” and password “bar”. We recommend you to generate new credentials. This can be done very easily, with the following steps:

$ cd /var/ossec/api/configuration/auth
$ sudo node htpasswd -c user myUserName

- Enable HTTPS:

In order to enable HTTPS you need to generate or provide a certificate. You can learn how to generate your own certificate or generate it automatically using the script /var/ossec/api/scripts/configure_api.sh.

- Bind to localhost:

In case you do not need to access the API externally, you should bind the API to localhost using the option config.host placed in the configuration file /var/ossec/api/configuration/config.js.

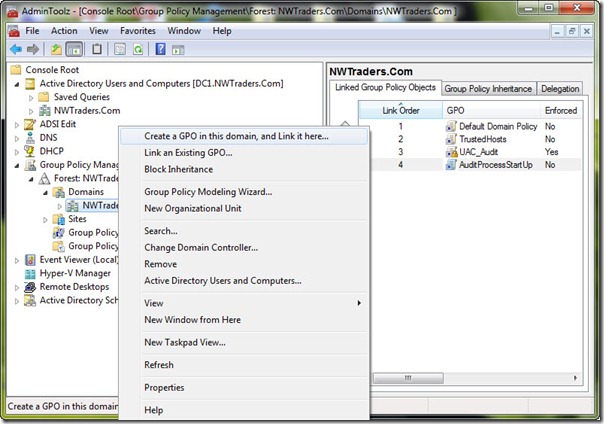

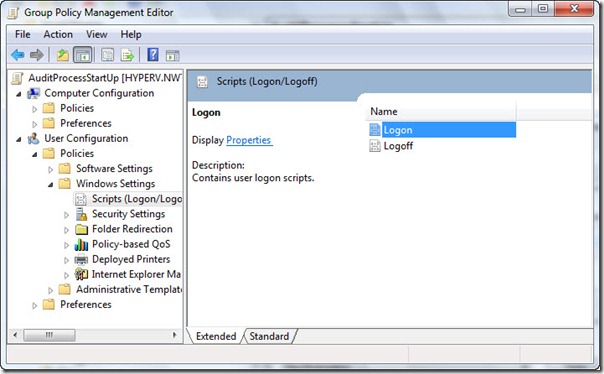

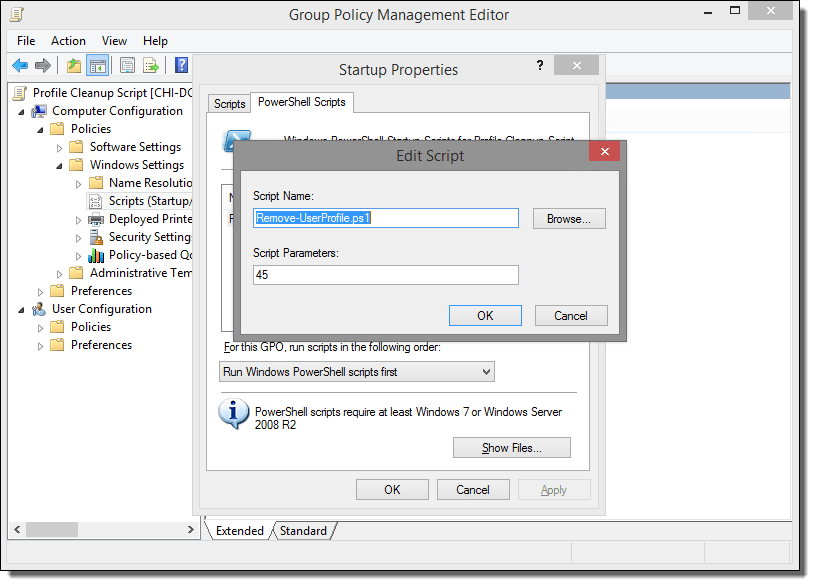

How to deploy ansibleconfigure powershell script on windows

Okay fun stuff, so I tried this a number of ways which I will describe in this blog post.

If your Windows server is joined to the domain, you have a machine that can reach all the virtual machines, WinRM is configured, and you have PowerShell 3.0 or higher set up, then you could try the following PowerShell foreach loop from the SYSVOL share.

Sample powershell For Loop

powershell loop deploy – ask credentials

$serverfiles = Import-Csv 'd:\scripts\hosts.csv'
$cred = Get-Credential
foreach ($server in $serverfiles) {
    Write-Output $server.names
    Invoke-Command -ComputerName $server.names -FilePath d:\scripts\ansibleconfigure.ps1 -Credential $cred
}

Note: This method sucked and failed for me because WinRM was not there, along with other restrictions on the hosts. The other problem was that I’m not exactly at an intermediate level with PowerShell, so I had to muddle around a lot.

What you want to do here is copy the configure script to SYSVOL so all the joined machines can reach the script.

In the search bar type: (replace domain to match)

script name

How to diagnose a kernel panic caused by a killed process

You should install atop on your server. It is top on steroids and can help diagnose all kinds of server issues (atop usage: https://lwn.net/Articles/387202/), such as:

- CPU load

- IO load

- Memory usage

- Process utilization of resources

- Paging/swapping

- etc…

- How to install atop on ubuntu/debian

- ‘apt-get install atop’

- Then start the atop logging

- ‘/etc/init.d/atop start’

Note: by default atop logs every 10 minutes

Now let’s say you open the console of your VM or blade server and see a message stating that the server killed a process or ran out of memory.

Example:

- Out of memory: Kill process 11970 (php) score 80 or sacrifice child

Killed process 11970 (php) total-vm:1957108kB

When you reboot the server you will want to find out exactly how it happened. You do this by checking the kernel log. If you have kdump installed you can use that to get a dump of the kernel log; if not, you can do this:

- dmesg | egrep -i 'killed process'

- this will provide a log like the one below

Kernel log

- [Wed July 10 13:27:30 2018] Out of memory: Kill process 11970 (php) score 80 or sacrifice child

- [Wed July 10 13:27:30 2018] Killed process 11970 (php) total-vm:123412108kB, anon-rss:1213410764kB, file-rss:2420k
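If the kernel log has many of these entries, it helps to reduce them to just the process ID and name before you go looking in atop. A small sketch, assuming the "Killed process PID (name)" wording shown above; the function name killed_processes is made up for illustration.

```shell
# killed_processes
# Read kernel log lines on stdin and print "PID NAME" for every process
# the OOM killer terminated. Hypothetical helper name.
killed_processes() {
    sed -n 's/.*Killed process \([0-9][0-9]*\) (\([^)]*\)).*/\1 \2/p'
}

# Usage: dmesg | killed_processes
```

Lines that only say "Kill process" (the decision, not the kill itself) are ignored, so each killed process is listed once per kill.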

Now once you have this log you can see the time stamp of when it occurred and you can use atop logs to drill down and find the process id, and see if you can see which daemon and or script caused the issue.

From the log ‘July 10 13:27:30 2018’ we can see the time stamp. Inside /var/log/atop you can do the following.

Run the following:

- ‘atop -r atop_20180710’

This will bring up a screen where you can move through the time intervals, using lowercase ‘t’ to go forward in time or capital ‘T’ to go backward in time.

- Once you find the time stamp you can press ‘c’ (full command line per process) to see which processes were running at that time stamp, and you should be able to locate the process ID from the kernel log.
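To avoid stepping through a whole day of samples, you can build the log file name from the date in the kernel log and tell atop where to start. A sketch, assuming the atop_YYYYMMDD file naming used in this post; the function name atop_log_for is made up, and the start time in the usage line is just an example a few minutes before the kill.

```shell
# atop_log_for DATE
# Print the atop log file name for a date given as YYYY-MM-DD.
# Assumes the atop_YYYYMMDD naming used in this post; hypothetical helper.
atop_log_for() {
    printf 'atop_%s\n' "$(printf '%s' "$1" | sed 's/-//g')"
}

# Usage: cd /var/log/atop && atop -r "$(atop_log_for 2018-07-10)" -b 13:20
```

The -b option makes atop begin the replay at the given time, so you land close to the sample you are after.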


Example

- 3082 27% php

- 15338 27% php

- 26639 25% php

- 8520 8% php

- 8796 8% php

- 2157 8% /usr/sbin/apache2 -k start

- 11970 1% php – This is the process ID from the kernel log above, and it shows what was running, so we know it was a php script. Atop doesn’t always provide the exact script. However, from the kernel log and this we can determine that it was some type of rss feed. You can also see that it wasn’t using very much CPU. This helps us determine that the php code is causing a memory leak and needs to be updated and/or disabled.

- 10493 1% php

- 10942 1% php

- 5335 1% php

- 9964 0% php

Written by Nick Tailor

How to configure Ansible to manage Windows Hosts on Ubuntu 16.04

Note: This section assumes you already have Ansible installed and working, Active Directory set up, and a test Windows host in communication with AD. AD is not strictly needed, but it is good practice to have it all set up and talking to each other for learning.

Setup

Ansible does not come with Windows management ability out of the box. It is easier to set up on CentOS, as the packages are better maintained on Red Hat distros. However, if you want to set it up on Ubuntu, here is what you need to do.

Next Setup your /etc/krb5.conf

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

default_realm = HOME.NICKTAILOR.COM

dns_lookup_realm = false

dns_lookup_kdc = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

[realms]

HOME.NICKTAILOR.COM = {

kdc = HOME.NICKTAILOR.COM

admin_server = HOME.NICKTAILOR.COM

}

[domain_realm]

.home.nicktailor.com = HOME.NICKTAILOR.COM

home.nicktailor.com = HOME.NICKTAILOR.COM

Test Kerberos

Run the following commands to test Kerberos:

kinit administrator@HOME.NICKTAILOR.COM   <-- make sure you type this with the exact case shown, or your authentication will fail. The user also has to have domain admin privileges.

You will be prompted for the administrator password.

klist

You should see a Kerberos KEYRING record.

[root@localhost win_playbooks]# klist

Ticket cache: FILE:/tmp/krb5cc_0
Default principal: administrator@HOME.NICKTAILOR.COM

Valid starting       Expires              Service principal
05/23/2018 14:20:50  05/24/2018 00:20:50  krbtgt/HOME.NICKTAILOR.COM@HOME.NICKTAILOR.COM
        renew until 05/30/2018 14:20:40

Configure Ansible

Ansible is complex and is sensitive to the environment. Troubleshooting an environment which has never initially worked is complex and confusing. We are going to configure Ansible with the least complex possible configuration. Once you have a working environment, you can make extensions and enhancements in small steps.

The core configuration of Ansible resides at /etc/ansible

We are only going to update two files for this exercise.

Update the Ansible Inventory file

Edit /etc/ansible/hosts and add:

[windows]

HOME.NICKTAILOR.COM

“[windows]” is a created group of servers called “windows”. In reality this should be named something more appropriate for a group which would have similar configurations, such as “Active Directory Servers”, or “Production Floor Windows 10 PCs”, etc.

Update the Ansible Group Variables for Windows

Ansible Group Variables are variable settings for a specific inventory group. In this case, we will create the group variables for the “windows” servers created in the /etc/ansible/hosts file.

Create /etc/ansible/group_vars/windows and add:

---
ansible_user: Administrator
ansible_password: Abcd1234
ansible_port: 5986
ansible_connection: winrm
ansible_winrm_server_cert_validation: ignore

This is a YAML configuration file, so make sure the first line is three dashes “---”

Naturally, change the Administrator password to the password for WinServer1.

For best practices, Ansible can encrypt this file into the Ansible Vault. This would prevent the password from being stored here in clear text. For this lab, we are attempting to keep the configuration as simple as possible. Naturally in production this would not be appropriate.

The PowerShell script described below must be run on the Windows client in order for Ansible to be able to talk to the host without issues.

Configure Windows Servers to Manage

To configure the Windows Server for remote management by Ansible requires a bit of work. Luckily the Ansible team has created a PowerShell script for this. Download this script from [here] to each Windows Server to manage and run this script as Administrator.

Log in to WinServer1 as Administrator, download ConfigureRemotingForAnsible.ps1 and run this PowerShell script without any parameters. Once the script has been run on WinServer1, return to the Ansible1 Controller host.

Test Connectivity to the Windows Server

If all has gone well, we should be able to perform an Ansible PING test command. This command will simply connect to the remote WinServer1 server and report success or failure.

Type:

ansible windows -m win_ping

This command runs the Ansible module “win_ping” on every server in the “windows” inventory group.
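If win_ping reports the host as unreachable, it can help to first confirm that the WinRM HTTPS port from the group variables (5986) accepts TCP connections at all. The following is a minimal sketch using only the Python standard library. The function name port_is_open and the timeout value are hypothetical choices, and a successful connection only shows that the port is reachable, not that WinRM authentication will work.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


# Example call (not executed here), using the host and port from this article:
# port_is_open("HOME.NICKTAILOR.COM", 5986)
```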

Type: ansible windows -m setup to retrieve the full set of facts that Ansible gathers about each Windows host.

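Type: ansible windows -m win_command -a ipconfig to run ipconfig on each Windows host. (The -c option selects the connection type, so it cannot be used to pass a command.)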

If this command is successful, the next steps will be to build Ansible playbooks to manage Windows Servers.

Managing Windows Servers with Playbooks

Let’s create some playbooks and test Ansible for real on Windows systems.

Create a folder on Ansible1 for the playbooks, YAML files, modules, scripts, etc. For these exercises we created a folder under /root called win_playbooks.

Ansible has some expectations on the directory structure where playbooks reside. Create the library and scripts folders for use later in this exercise.

Commands:

cd /root

mkdir win_playbooks

mkdir win_playbooks/library

mkdir win_playbooks/scripts

Create the first playbook example “netstate.yml”

The contents are:

- name: test cmd from win_command module
  hosts: windows
  tasks:
    - name: run netstat and return Ethernet stats
      win_command: netstat -e
      register: netstat
    - debug: var=netstat

This playbook connects to the servers in the Ansible inventory group “windows”, runs the command netstat.exe -e, registers the output in the variable netstat, and prints it with the debug task.

To run this playbook, run this command on Ansible1 from the win_playbooks folder:

ansible-playbook netstate.yml
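The playbook is normally started with the ansible-playbook command, for example ansible-playbook netstate.yml. If you would rather launch it from a Python script, a small wrapper around subprocess could look like the sketch below. The helper names are hypothetical; -i (inventory) and --check (dry run) are standard ansible-playbook options, and the sketch assumes ansible-playbook is on the PATH of Ansible1.

```python
import subprocess


def build_playbook_command(playbook, inventory=None, check=False):
    """Build the ansible-playbook argument list without running it."""
    cmd = ["ansible-playbook", playbook]
    if inventory:
        cmd += ["-i", inventory]  # use a non-default inventory file
    if check:
        cmd.append("--check")  # dry run: report changes without applying them
    return cmd


def run_playbook(playbook, inventory=None, check=False, cwd=None):
    """Run the playbook and return the exit code (0 means success)."""
    cmd = build_playbook_command(playbook, inventory, check)
    return subprocess.run(cmd, cwd=cwd).returncode


# Example call (not executed here):
# run_playbook("netstate.yml", cwd="/root/win_playbooks")
print(" ".join(build_playbook_command("netstate.yml")))
```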

Errors that I ran into

On Ubuntu you might get an SSL error when trying to run a playbook. This is because the Python libraries try to verify the self-signed certificate before opening a secure connection over HTTPS.

ansible windows -m win_ping

Wintestserver1 | UNREACHABLE! => {
    "changed": false,
    "msg": "ssl: 500 WinRMTransport. [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:590)",
    "unreachable": true
}


You can get around this by updating the Python library so that it does not look for a valid certificate and simply opens a secure connection.

Edit /usr/lib/python2.7/sitecustomize.py

--------------------

import ssl

try:
    _create_unverified_https_context = ssl._create_unverified_context
except AttributeError:
    # Legacy Python that doesn't verify HTTPS certificates by default
    pass
else:
    # Handle target environment that doesn't support HTTPS verification
    ssl._create_default_https_context = _create_unverified_https_context

--------------------

Then it should look like this

ansible windows -m win_ping

wintestserver1 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
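To see what the sitecustomize.py change actually alters, the sketch below compares Python's default HTTPS context with the unverified one. It is written for Python 3 purely as an illustration (the article patches Python 2.7), and verifies_certificates is a hypothetical helper name. Note that ssl._create_unverified_context is a private function of the ssl module.

```python
import ssl


def verifies_certificates(ctx):
    """True if the context checks both the certificate chain and the hostname."""
    return bool(ctx.verify_mode == ssl.CERT_REQUIRED and ctx.check_hostname)


default_ctx = ssl.create_default_context()
unverified_ctx = ssl._create_unverified_context()

print("default context verifies:", verifies_certificates(default_ctx))
print("unverified context verifies:", verifies_certificates(unverified_ctx))
```

Because the sitecustomize.py patch replaces the default context for every Python program on the machine, it is best kept to lab hosts like the one in this article.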

Proxies and WSUS:

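If you are behind a proxy, exclude the Windows hosts from it on the Ansible host by exporting no_proxy as a comma-separated list with no spaces, for example:

export no_proxy=127.0.0.1,winserver1

Or add the same export line to a file in /etc/profile.d/ (for example /etc/profile.d/whatever.sh) so that it is set at every login.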

If you have WSUS configured, check that it actually has updates available for the host; otherwise nothing will show up when the playbook searches for new updates.

Test Windows updates YAML:

---
- hosts: windows
  gather_facts: no
  tasks:
    - name: Search Windows Updates
      win_updates:
        category_names:
          - SecurityUpdates
          - CriticalUpdates
          - UpdateRollups
          - Updates
        state: searched
        log_path: C:\ansible_wu.txt
    - name: Install updates
      win_updates:
        category_names:
          - SecurityUpdates
          - CriticalUpdates
          - UpdateRollups
          - Updates

If it works properly, the log file on the test host (C:\ansible_wu.txt) will show the updates that were found, something like the following:

2018-06-04 08:47:54Z Creating Windows Update session…

2018-06-04 08:47:54Z Create Windows Update searcher…

2018-06-04 08:47:54Z Search criteria: (IsInstalled = 0 AND CategoryIds contains '0FA1201D-4330-4FA8-8AE9-B877473B6441') OR(IsInstalled = 0 AND CategoryIds contains 'E6CF1350-C01B-414D-A61F-263D14D133B4') OR(IsInstalled = 0 AND CategoryIds contains '28BC880E-0592-4CBF-8F95-C79B17911D5F') OR(IsInstalled = 0 AND CategoryIds contains 'CD5FFD1E-E932-4E3A-BF74-18BF0B1BBD83')

2018-06-04 08:47:54Z Searching for updates to install in category Ids 0FA1201D-4330-4FA8-8AE9-B877473B6441 E6CF1350-C01B-414D-A61F-263D14D133B4 28BC880E-0592-4CBF-8F95-C79B17911D5F CD5FFD1E-E932-4E3A-BF74-18BF0B1BBD83…

2018-06-04 08:48:33Z Found 2 updates

2018-06-04 08:48:33Z Creating update collection…

2018-06-04 08:48:33Z Adding update 67a00639-09a1-4c5f-83ff-394e7601fc03 – Security Update for Windows Server 2012 R2 (KB3161949)

2018-06-04 08:48:33Z Adding update ba0f75ff-19c3-4cbd-a3f3-ef5b5c0f88bf – Security Update for Windows Server 2012 R2 (KB3162343)

2018-06-04 08:48:33Z Calculating pre-install reboot requirement…

2018-06-04 08:48:33Z Check mode: exiting…

2018-06-04 08:48:33Z Return value:

{
    "updates": {
        "67a00639-09a1-4c5f-83ff-394e7601fc03": {
            "title": "Security Update for Windows Server 2012 R2 (KB3161949)",
            "id": "67a00639-09a1-4c5f-83ff-394e7601fc03",
            "installed": false,
            "kb": [
                "3161949"
            ]
        },
        "ba0f75ff-19c3-4cbd-a3f3-ef5b5c0f88bf": {
            "title": "Security Update for Windows Server 2012 R2 (KB3162343)",
            "id": "ba0f75ff-19c3-4cbd-a3f3-ef5b5c0f88bf",
            "installed": false,
            "kb": [
                "3162343"
            ]
        }
    },
    "found_update_count": 2,
    "changed": false,
    "reboot_required": false,
    "installed_update_count": 0,
    "filtered_updates": {
    }
}
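If you want to report on the result rather than read the log by eye, the return value is plain JSON and can be processed with Python's json module. The sketch below uses a shortened copy of the return value from this article; the function name pending_kbs is hypothetical, and the field names are taken only from the sample shown here.

```python
import json

# Shortened copy of the return value logged in this article.
SAMPLE_RETURN = """
{
    "updates": {
        "67a00639-09a1-4c5f-83ff-394e7601fc03": {
            "title": "Security Update for Windows Server 2012 R2 (KB3161949)",
            "installed": false,
            "kb": ["3161949"]
        },
        "ba0f75ff-19c3-4cbd-a3f3-ef5b5c0f88bf": {
            "title": "Security Update for Windows Server 2012 R2 (KB3162343)",
            "installed": false,
            "kb": ["3162343"]
        }
    },
    "found_update_count": 2,
    "reboot_required": false,
    "installed_update_count": 0
}
"""


def pending_kbs(result):
    """Return the sorted KB numbers of updates that are not installed yet."""
    kbs = []
    for update in result["updates"].values():
        if not update["installed"]:
            kbs.extend("KB" + number for number in update["kb"])
    return sorted(kbs)


result = json.loads(SAMPLE_RETURN)
print("pending:", ", ".join(pending_kbs(result)))
```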

Written By Nick Tailor

How to set up Ansible on Ubuntu 16.04

Installation

Type the following apt-get command or apt command:

$ sudo apt-get update

$ sudo apt-get upgrade

$ sudo apt-get install software-properties-common

Next add ppa:ansible/ansible to your system’s Software Source:

$ sudo apt-add-repository ppa:ansible/ansible

Ansible is a radically simple IT automation platform that makes your applications and systems easier to deploy. Avoid writing scripts or custom code to deploy and update your applications— automate in a language that approaches plain English, using SSH, with no agents to install on remote systems.

http://ansible.com/

More info: https://launchpad.net/~ansible/+archive/ubuntu/ansible

Press [ENTER] to continue or Ctrl-c to cancel adding it.

gpg: keybox '/tmp/tmp6t9bsfxg/pubring.gpg' created
gpg: /tmp/tmp6t9bsfxg/trustdb.gpg: trustdb created
gpg: key 93C4A3FD7BB9C367: public key "Launchpad PPA for Ansible, Inc." imported
gpg: Total number processed: 1
gpg: imported: 1
OK

Update your repos:

$ sudo apt-get update

Sample outputs:

Ign:1 http://dl.google.com/linux/chrome/deb stable InRelease
Hit:2 http://dl.google.com/linux/chrome/deb stable Release
Get:4 http://in.archive.ubuntu.com/ubuntu artful InRelease [237 kB]
Hit:5 http://security.ubuntu.com/ubuntu artful-security InRelease
Get:6 http://ppa.launchpad.net/ansible/ansible/ubuntu artful InRelease [15.9 kB]
Get:7 http://ppa.launchpad.net/ansible/ansible/ubuntu artful/main amd64 Packages [560 B]
Get:8 http://in.archive.ubuntu.com/ubuntu artful-updates InRelease [65.4 kB]
Hit:9 http://in.archive.ubuntu.com/ubuntu artful-backports InRelease
Get:10 http://ppa.launchpad.net/ansible/ansible/ubuntu artful/main i386 Packages [560 B]
Get:11 http://ppa.launchpad.net/ansible/ansible/ubuntu artful/main Translation-en [340 B]
Fetched 319 kB in 5s (62.3 kB/s)
Reading package lists… Done

To install the latest version of ansible, enter:

$ sudo apt-get install ansible

Finding out Ansible version

Type the following command:

$ ansible --version

Sample outputs:

ansible 2.4.0.0
  config file = /etc/ansible/ansible.cfg
  configured module search path = [u'/home/vivek/.ansible/plugins/modules', u'/usr/share/ansible/plugins/modules']
  ansible python module location = /usr/lib/python2.7/dist-packages/ansible
  executable location = /usr/bin/ansible
  python version = 2.7.14 (default, Sep 23 2017, 22:06:14) [GCC 7.2.0]

Creating your hosts file

Ansible needs to know your remote server names or IP address. This information is stored in a file called hosts. The default is /etc/ansible/hosts. You can edit this one or create a new one in your $HOME directory:

$ sudo vi /etc/ansible/hosts

Or

$ vi $HOME/hosts

Append your server’s DNS or IP address:

[webservers]

server1.nicktailor.com

192.168.0.21

192.168.0.25

[devservers]

192.168.0.22

192.168.0.23

192.168.0.24

I have two groups. The first one is named webservers and the other is called devservers.
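To double-check which hosts ended up in which group, a simple inventory like the one above can be read with Python's configparser. This is only a sketch: Ansible's INI inventory format supports more than configparser understands (host variables, ranges, child groups), so the approach is assumed to work only for plain host lists such as this one. The function name hosts_by_group is hypothetical.

```python
import configparser

# Copy of the sample inventory from this article.
SAMPLE_INVENTORY = """
[webservers]
server1.nicktailor.com
192.168.0.21
192.168.0.25

[devservers]
192.168.0.22
192.168.0.23
192.168.0.24
"""


def hosts_by_group(text):
    """Return {group name: [hosts]} for a plain host-list inventory."""
    parser = configparser.ConfigParser(allow_no_value=True, interpolation=None)
    parser.optionxform = str  # keep host names exactly as written
    parser.read_string(text)
    return {section: list(parser[section]) for section in parser.sections()}


for group, hosts in hosts_by_group(SAMPLE_INVENTORY).items():
    print(group, "->", ", ".join(hosts))
```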

Setting up ssh keys

You must configure ssh keys between your machine and remote servers specified in ~/hosts file:

$ ssh-keygen -t rsa -b 4096 -C "My ansible key"

Use scp or ssh-copy-id command to copy your public key file (e.g., $HOME/.ssh/id_rsa.pub) to your account on the remote server/host:

$ ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@server1.nicktailor.com

$ ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@192.168.0.22

$ ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@192.168.0.23

$ ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@192.168.0.24

$ eval $(ssh-agent)

$ ssh-add

Now ansible can talk to all remote servers using ssh command.

Send a ping to all servers

Just type the following command:

$ ansible -i ~/hosts -m ping all

Sample outputs:

192.168.0.22 | SUCCESS => {
    "changed": false,
    "failed": false,
    "ping": "pong"
}
192.168.0.23 | SUCCESS => {
    "changed": false,
    "failed": false,
    "ping": "pong"
}
192.168.0.24 | SUCCESS => {
    "changed": false,
    "failed": false,
    "ping": "pong"
}
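With more than a handful of hosts, the ping output gets long. The sketch below condenses it into one status per host by matching the "host | STATUS => {" header lines. It assumes the output format shown in this article (other Ansible versions or output callbacks may print differently), and summarize_ping is a hypothetical helper name.

```python
import re

# Matches header lines such as "192.168.0.22 | SUCCESS => {"
# and "wintestserver1 | UNREACHABLE! => {".
HOST_LINE = re.compile(r"^(\S+) \| (\w+)!? => \{", re.MULTILINE)

SAMPLE_OUTPUT = """192.168.0.22 | SUCCESS => {
    "changed": false,
    "failed": false,
    "ping": "pong"
}
192.168.0.23 | SUCCESS => {
    "changed": false,
    "failed": false,
    "ping": "pong"
}
wintestserver1 | UNREACHABLE! => {
    "changed": false,
    "unreachable": true
}
"""


def summarize_ping(output):
    """Return {host: status} parsed from ad-hoc ansible output."""
    return dict(HOST_LINE.findall(output))


for host, status in summarize_ping(SAMPLE_OUTPUT).items():
    print(host, status)
```

The text to parse could come from saving the output of ansible -i ~/hosts -m ping all to a file.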

Find out uptime for all hosts